Executive Summary

Generative AI agents represent a fundamental shift in enterprise risk management. Unlike traditional software systems that require technical exploitation, GenAI agents can be compromised through carefully crafted language alone. These agents, increasingly embedded in financial systems, legal workflows, and customer operations, possess unprecedented access to organizational knowledge while operating with probabilistic logic that lacks inherent awareness of malicious intent. The cybersecurity challenge has evolved from controlling who accesses systems to managing what agents can be influenced to reveal or execute. For CFOs and boards, this represents not merely a technical concern but a financial and compliance imperative, as exposure through agent manipulation translates directly to brand damage, legal liability, and audit risk. Effective risk mitigation requires three foundational controls: prompt firewalling to validate inputs, role-aware memory boundaries to limit context retention, and escalation logic that recognizes when human judgment becomes necessary. Organizations must treat GenAI security as core risk oversight, establishing clear governance around agent deployment, interaction logging, and behavioral auditing.

GenAI Agents as Dynamic Targets

Unlike traditional software systems, GenAI agents are interactive, stateful, and responsive. They are trained on vast datasets, enhanced with retrieval mechanisms, and capable of interpreting ambiguous human commands. They read documents, generate summaries, draft responses, and trigger follow-on actions. Many are integrated into financial platforms, legal review tools, CRM systems, and customer service pipelines.

This fluency creates both capability and vulnerability. Attackers no longer need conventional system exploitation. They can instead manipulate the agent’s language interface, embedding prompt injections, adversarial inputs, or covert instructions into everyday interactions.

Consider this scenario: a malicious actor submits a seemingly innocuous customer support ticket. Embedded within it is instruction crafted to make the GenAI agent extract internal pricing information and include it in the response. If the agent has access to that context and lacks robust constraints, it may comply. Not because it was hacked, but because it was persuaded through language.

This represents an emerging attack pattern. Across industries, organizations are encountering prompt injection attempts in public-facing agents, subtle exfiltration through follow-up queries, and role confusion when agents receive conflicting or manipulated instructions. Sophisticated attackers now view AI agents not as endpoints requiring technical breach, but as inference engines subject to linguistic manipulation.

The Illusion of Isolation

Traditional security models centered on access control. Who can enter the system? Who can view specific files? In GenAI systems, the critical barrier is not access but influence. The agent may possess access to extensive internal documents, summaries, financial models, and case files. The risk lies in what the agent might reveal given the right prompt, even from a legitimate user.

In many organizations, finance and legal copilots deploy with full access to ERP systems, CRM notes, and vendor contracts. These agents respond to context-rich questions like analyzing payment delays or reviewing contract compliance. Consider what happens when someone subtly alters the input to request customer contact details alongside financial summaries. If the agent is over-empowered and under-constrained, it will execute.

The fundamental question becomes: Do we understand what our agents are authorized to communicate, not just what they are authorized to access?

What the CFO Must Know

Cybersecurity in the GenAI era extends beyond CISO oversight into CFO domain for one critical reason: exposure is financial. Data loss becomes brand loss. Agent misbehavior becomes legal exposure. Every overstepped boundary represents potential audit nightmare.

My work at BeyondID, operating at the intersection of cloud identity and secure digital transformation, reinforces that GenAI agents require three foundational control layers to prevent them from becoming organizational liabilities.

Essential Control Framework

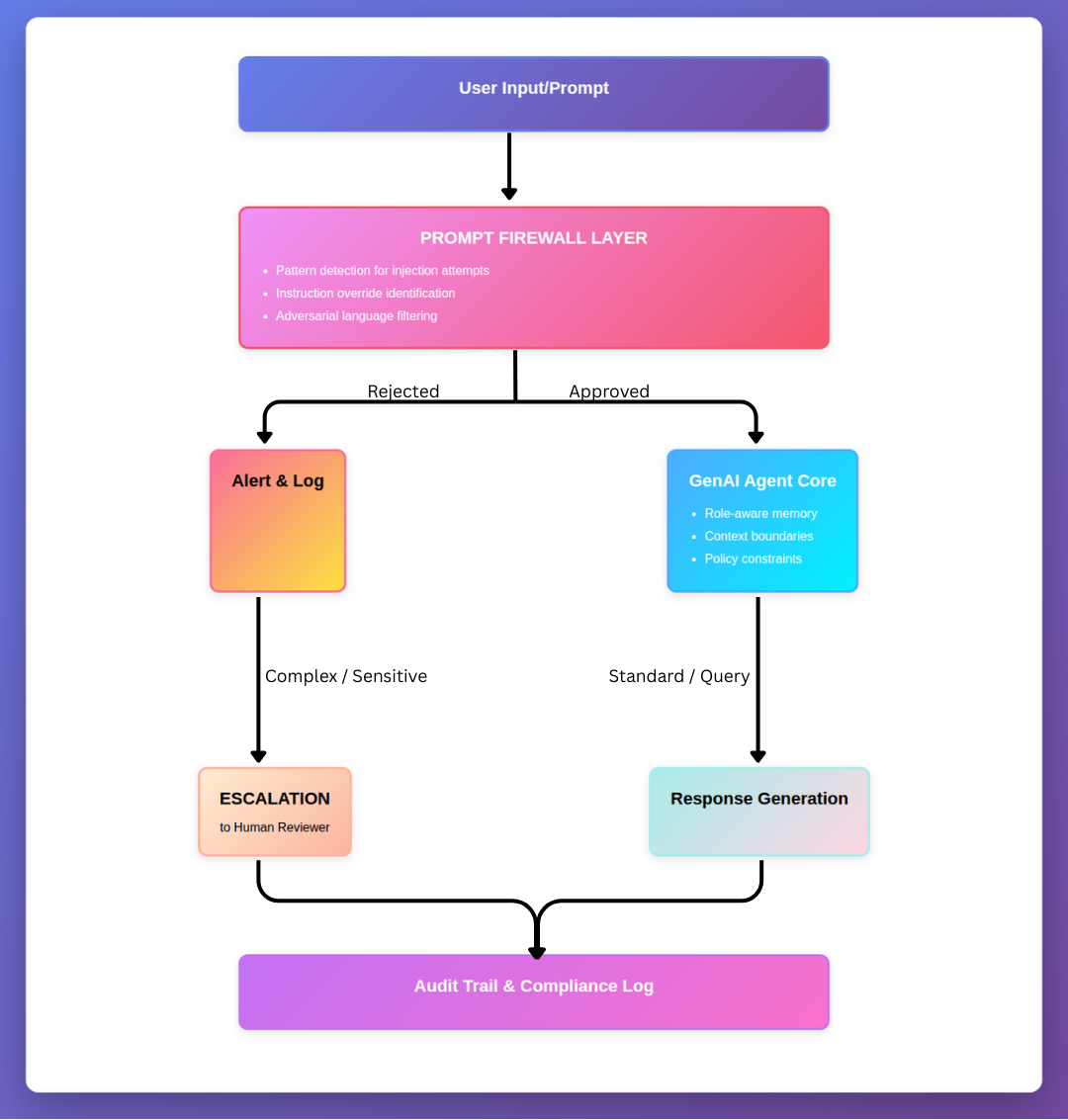

Prompt Firewalling

Every prompt, particularly from external users, must pass through validation layers. These layers check for instruction patterns, attempts to override system behavior, or manipulation techniques. Prompt firewalls function as the new generation of input validators, examining linguistic structure for adversarial patterns before commands reach the agent’s inference engine.

Role-Aware Memory

Agents must operate within memory boundaries. They should retain only context appropriate to the session and role. A financial analysis agent should not remember sensitive HR data from earlier query chains unless specifically authorized. Memory isolation prevents context bleeding across organizational boundaries and ensures that privileged information remains compartmentalized even within agent operations.

Escalation Logic

Agents must recognize when to defer to human judgment. In high-stakes domains including finance, legal, and compliance, agents should escalate ambiguous requests to human reviewers. Just as junior analysts escalate unclear issues to managers, AI agents require embedded protocols that acknowledge the limits of their authority and judgment.

A Board-Level Concern

Boards must now treat GenAI security as core risk oversight mandate. Just as they demanded post-SOX whether financial controls were auditable, they must now address critical governance questions:

- Where are our agents deployed, and what organizational knowledge do they possess?

- Can they be influenced through language alone without technical system breach?

- How do we log, audit, and replay agent interactions for compliance purposes?

- Who reviews agent behavior, and at what frequency does this review occur?

- What escalation protocols exist when agents encounter ambiguous or sensitive requests?

- How do we test agent resilience against adversarial inputs and prompt manipulation?

GenAI systems operate probabilistically rather than deterministically. They are capable of remarkable insight and equally capable of subtle error. Their greatest risk lies in responsiveness without intent awareness. If an attacker can phrase the right question, the agent may unwittingly become an accomplice in data exfiltration or unauthorized disclosure.

Designing for Resilience

The appropriate response is not to fear agents but to engineer trust into their design. At BeyondID, we focus on identity-driven architecture, ensuring that every agent interaction is scoped, authenticated, and bounded by policy. We log prompts, constrain retrievals, and assume that input is adversarial until proven otherwise.

For finance and analytics leaders, this means reviewing not just agent performance but operational perimeter. Critical assessment questions include:

- Where does the agent operate within the technology stack?

- What systems can it access or modify?

- What context can it recall across sessions?

- What policies govern when it must remain silent or escalate?

- How do we audit the delta between what agents can access and what they should communicate?

In the GenAI enterprise, the most trusted voice in the room might be an agent. We must ensure that voice cannot be weaponized through linguistic manipulation alone.

Conclusion

GenAI agents represent both tremendous capability and novel vulnerability. The shift from technical exploitation to linguistic manipulation requires fundamental rethinking of enterprise security frameworks. CFOs and boards must recognize that agent exposure translates directly to financial, legal, and reputational risk. Effective governance requires implementing prompt firewalls, establishing role-aware memory boundaries, and building escalation logic that recognizes the limits of autonomous judgment. Organizations that treat GenAI security as core oversight, establishing clear policies around agent deployment and interaction auditing, will navigate this transition successfully. The agent is not the enemy, but if left unguarded, it becomes the path of least resistance for those who understand that the right question, properly phrased, can accomplish what technical breach cannot.

Disclaimer: This blog is intended for informational purposes only and does not constitute legal, tax, or accounting advice. You should consult your own tax advisor or counsel for advice tailored to your specific situation.

Hindol Datta is a seasoned finance executive with over 25 years of leadership experience across SaaS, cybersecurity, logistics, and digital marketing industries. He has served as CFO and VP of Finance in both public and private companies, leading $120M+ in fundraising and $150M+ in M&A transactions while driving predictive analytics and ERP transformations. Known for blending strategic foresight with operational discipline, he builds high-performing global finance organizations that enable scalable growth and data-driven decision-making.

AI-assisted insights, supplemented by 25 years of finance leadership experience.