Executive Summary

Transformative shifts in the enterprise often arrive through changed assumptions about people rather than flashy new tools. As generative AI and agent-based workflows become intertwined with everyday work, company designers must rethink not just who they hire but how talent and intelligent systems are orchestrated together. The AI-native firm should measure talent in Full Learning Equivalents (FLEs), the organization's ability to cultivate systems that learn, adapt, and improve, rather than in simple headcount. Traditional org charts emphasize hierarchy and siloed workflows. The agent economy requires blending these silos into intelligence nodes that orchestrate humans and machines. New roles become essential: Learning Engineer, Prompt Architect, Agent Supervisor, Ethical AI Advocate, and Metrics Librarian. Performance evaluation must focus on how human roles amplify intelligence, measured through error reduction and intervention rate rather than raw output volume. The question is not how many you hire but how much your organization can learn and adapt.

From FTE to FLE: Rethinking Capacity

The era when full-time equivalents (FTEs) were enough to measure capacity is long gone. The AI-native firm should measure its talent in Full Learning Equivalents (FLEs): the organization's ability to cultivate systems that learn, adapt, and improve. When agents take over routine tasks, headcount loses much of its meaning as a measure of capacity. What matters is how much humans contribute to the model’s intelligence. The data steward who trims hallucinations and the prompt engineer who sharpens forecasting logic are not just filling seats. They are directing the learning engine.

Hiring must therefore shift from capacity-building to learning-building. Look for profiles that elevate the system: can they raise forecast accuracy, reduce contract review time, or improve quality metrics? These individuals are not just analysts. They are intelligence multipliers. Measuring the organization in FLEs encourages founders to ask not "How many people do we have?" but "How much smarter do our models get when our people work with them?"

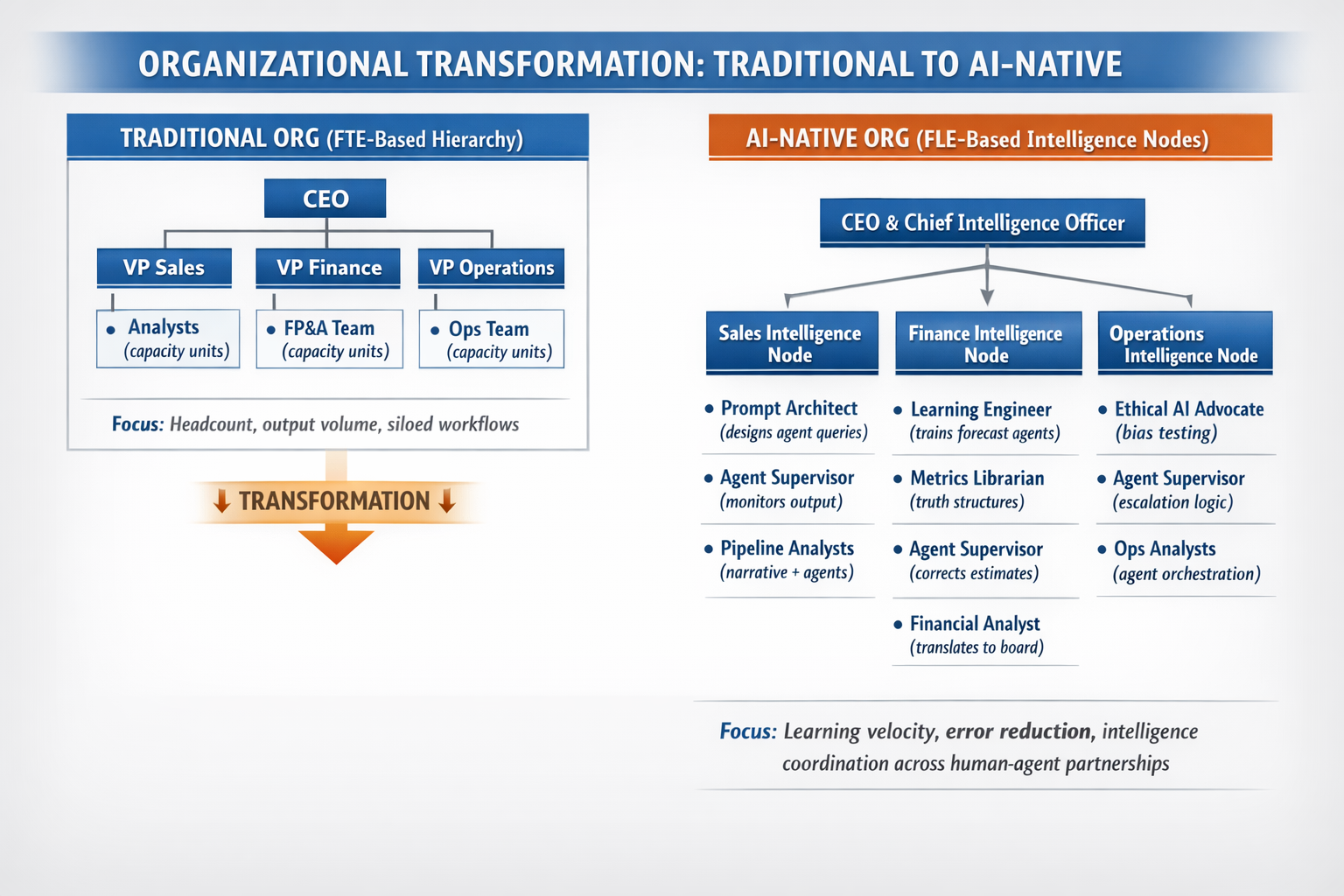

Org Charts Become Intelligence Nodes

Traditional org charts emphasize hierarchy and siloed workflows. Sales follows marketing. Finance follows operations. The agent economy requires blending these silos into intelligence nodes, centers of coordination that orchestrate humans and machines alike. These nodes can live within teams: a prompt architect embedded in sales, an agent supervisor in finance, or a learning engineer in product.

From Hierarchy to Intelligence Coordination

This diagram illustrates the structural shift from traditional FTE-based hierarchies to AI-native intelligence nodes. In the traditional model, org charts measure capacity through headcount and silo functions into separate reporting lines. In the AI-native model, org charts measure learning velocity through Full Learning Equivalents and organize around intelligence coordination, with specialized roles embedded across nodes to orchestrate human-agent collaboration. Each intelligence node contains a mix of prompt architects, agent supervisors, learning engineers, and analysts who work together to amplify the intelligence of both human and synthetic systems.

Essential AI-Native Roles

In the AI economy, new roles become essential. These roles are not optional. They define the intelligence fabric of the company:

- Learning Engineer: Bridges data, model training, and operations pipelines. Defines retraining frequency, feedback loops, and pipeline injections. Tracks model drift and ensures agent retrievability.

- Prompt Architect: Designs query templates and calibrates agent tone, specificity, and failure logic. Engineers prompt logic to prevent hallucination or offensive output. Requires psychological and linguistic insight.

- Agent Supervisor: Monitors confidence thresholds and reviews agent output. Escalates issues, corrects behavior, and files the rationale for each override. Acts as the human governance layer and as a corrective learning partner for the agent.

- Ethical AI Advocate: Focuses on fairness, privacy, bias testing, and data compliance. Evaluates agent output for compliance with policies and standards. Designs red teams for adversarial testing.

- Metrics Librarian: Manages metric definitions and keeps them synchronized across humans and agents. Aligns the source-of-truth definitions for ARR, churn, margin, and other key metrics. Essential for consistency and confidence.

Collectively, these roles ensure that humans guide the learning systems. Each becomes a pillar in the AI-augmented organization.
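To make the Agent Supervisor's loop concrete, here is a minimal Python sketch of confidence-threshold routing with an override log. Everything in it is an illustrative assumption rather than a prescribed design: the 0.80 threshold, the `AgentOutput` and `OverrideRecord` types, and the `route` function are hypothetical names chosen for this example.

```python
from dataclasses import dataclass

# Hypothetical threshold: outputs below this confidence go to a human.
REVIEW_THRESHOLD = 0.80


@dataclass
class AgentOutput:
    task_id: str
    confidence: float  # agent's self-reported confidence, 0.0 to 1.0


@dataclass
class OverrideRecord:
    """The rationale a supervisor files when correcting an agent."""
    task_id: str
    reviewer: str
    rationale: str


def route(output: AgentOutput, threshold: float = REVIEW_THRESHOLD) -> str:
    """Return where an agent output should go next."""
    if output.confidence >= threshold:
        return "auto_approve"
    return "human_review"


# Example: one confident output, one that needs a human look.
confident = AgentOutput(task_id="invoice-001", confidence=0.93)
uncertain = AgentOutput(task_id="invoice-002", confidence=0.55)

override_log = []
if route(uncertain) == "human_review":
    # The supervisor corrects the output and records why.
    override_log.append(
        OverrideRecord(
            task_id=uncertain.task_id,
            reviewer="agent_supervisor",
            rationale="Vendor name mismatched the contract of record.",
        )
    )
```

The override log matters as much as the routing: filed rationales are the raw material a Learning Engineer can feed back into retraining and prompt refinement.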

Measuring Performance Through Learning Yield

Output metrics such as reports delivered or sales calls made no longer tell the full story. Performance evaluation in an AI-native world must focus on how human roles amplify intelligence. Did forecast error decline? Is the agent error rate shrinking? Are prompts converging? People who scaffold intelligent agents must be rewarded for reducing intervention frequency, improving model accuracy, and expanding the range of autonomous decisions.

This demands a framework of learning metrics. Each intelligence specialist should own an improvement bucket: forecasts, contract review, risk detection, or campaign generation. Progress is measured as the reduction in error or intervention rate per unit of time, rather than by raw output volume.
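One possible way to turn "reduction in intervention rate per unit of time" into a number is sketched below in Python. The definition used here (relative drop in the share of agent decisions that needed human intervention, period over period) is an assumption made for illustration, as are the names `PeriodStats` and `learning_yield` and the sample figures.

```python
from dataclasses import dataclass


@dataclass
class PeriodStats:
    label: str
    agent_decisions: int      # decisions the agent attempted in the period
    human_interventions: int  # decisions a human had to correct or override

    @property
    def intervention_rate(self) -> float:
        """Share of agent decisions that required a human."""
        if self.agent_decisions == 0:
            return 0.0
        return self.human_interventions / self.agent_decisions


def learning_yield(previous: PeriodStats, current: PeriodStats) -> float:
    """Relative reduction in intervention rate from one period to the next.

    Positive values mean the agent needed less human help; negative
    values mean it needed more.
    """
    prev_rate = previous.intervention_rate
    if prev_rate == 0:
        return 0.0
    return (prev_rate - current.intervention_rate) / prev_rate


# Illustrative numbers only, for a hypothetical contract-review bucket.
q1 = PeriodStats("Q1", agent_decisions=1000, human_interventions=200)
q2 = PeriodStats("Q2", agent_decisions=1200, human_interventions=150)

yield_q2 = learning_yield(q1, q2)
print(f"{q2.label} learning yield: {yield_q2:.1%}")
```

In this example the intervention rate falls from 20% to 12.5% even though volume grew, which is the kind of improvement a volume-only metric would miss.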

Recruiting for Intelligence

Founders must evaluate candidates along two dimensions: learning orientation and orchestration skill. Candidates must show curiosity about model behavior and demonstrate the ability to collaborate across engineering, operations, product, and legal.

Interviews should include real tasks: designing prompts, debugging hallucinations, and simulating error detection. Candidates who can improve model performance per unit of input bring capability that goes beyond fill-in-the-blank skills.

Training the AI-Native Organization

Rewriting your org requires training it, not just building it. AI-native organizations demand a new curriculum:

- Prompt literacy: Every employee needs basics on how prompts work, how models think, and when hallucinations occur.

- Human-agent handoffs: Teams must rehearse the moment of intervention and know who fixes flagged issues.

- Security and ethics: Understand prompt injection, data leakage, and the boundaries of sensitive context.

- Agent calibration: Monthly sprint reviews of outliers and model performance. Inspect hallucinations and refine prompts.

These elements must be woven into onboarding from day one and built into quarterly training.

Cultural Norms for Human-Agent Collaboration

Culture shifts when agents work alongside humans. Founders must set norms early. Agents propose, humans decide. Encourage teams to share agent output, and reward insights drawn from agent suggestions. Celebrate mistakes: when agents make errors, analyze them and iterate. Surface agent craftsmanship through shared prompt libraries, retraining sessions, and metrics curves.

Conclusion

Founders in early-stage companies have an opportunity. Artificial intelligence is not just another feature. It is the foundation on which modern scale can be built. To begin, map workflows and identify where agents can forecast, recommend, triage, or simulate. Define the intelligence insertion points. Build roles. Hire intentionally. Train the organization.

An org chart that fuses talent and cognition is not just a diagram. It becomes a blueprint for scale, insight, and resilience. Headcount will always matter. But in the AI economy, intelligence is the true capacity. Your ability to teach systems is your competitive lever. The question is not how many you hire but how much your organization can learn and adapt.

Disclaimer: This blog is intended for informational purposes only and does not constitute legal, tax, or accounting advice. You should consult your own tax advisor or counsel for advice tailored to your specific situation.

Hindol Datta is a seasoned finance executive with over 25 years of leadership experience across SaaS, cybersecurity, logistics, and digital marketing industries. He has served as CFO and VP of Finance in both public and private companies, leading $120M+ in fundraising and $150M+ in M&A transactions while driving predictive analytics and ERP transformations. Known for blending strategic foresight with operational discipline, he builds high-performing global finance organizations that enable scalable growth and data-driven decision-making.

AI-assisted insights, supplemented by 25 years of finance leadership experience.