Executive Summary

When I reflect on the early days of startup formation, whether sitting around a whiteboard with founders in a SaaS garage or stress-testing product-market fit in a post-seed analytics company, one pattern emerges consistently: great companies are not just well-funded; they are well-framed. They reflect the future they are trying to serve, not the past they are trying to disrupt. In the age of generative AI, the most foundational question for any new venture is no longer “Where does AI fit in?” but rather “What does it mean to be AI-native from day one?”

This is not a question of hype-chasing but of architecture, team design, data strategy, and product DNA. Being AI-native means building a company where machine intelligence is not an add-on but the organizing principle of how work is done, decisions are made, and value is created. Having operated across multiple industries spanning gaming, adtech, healthcare, and logistics, I have watched the AI conversation shift from exploratory R&D to core operations. This essay lays out a practical blueprint for founders building AI-native companies from zero. In the new economy, intelligence is the infrastructure.

Define What AI-Native Actually Means

At its core, an AI-native startup is one where:

- Core workflows are automated or co-piloted by AI agents.

- Data is captured with the explicit intention of learning from it.

- The product improves with usage without linear human effort.

- Decisions are increasingly made with model input rather than just heuristics.

This does not mean every feature has to be AI-powered. It means the system itself learns, and customers receive differentiated value because of that learning.

A traditional CRM helps you store contacts. An AI-native CRM learns how your team closes deals and proactively suggests sequences, pricing, or objection-handling logic. A traditional edtech platform hosts content. An AI-native platform dynamically sequences material based on learner behavior and performance. The difference is not just technical but strategic.

Start With the Right Architectural Foundation

If you are building an AI-native company, architecture is your capital multiplier. Poor architecture locks you into brittle tools. Smart architecture compounds learning, speeds deployment, and enables extensibility.

Key architectural elements:

- Unified Data Layer: All inputs, including user behavior, logs, documents, and contracts, must feed into a common semantic layer that agents can query.

- Promptable System Interfaces: Design every system endpoint so it can respond to natural language or API queries from agents.

- Context Windows: Think of your product as a memory system. Design your architecture to serve context efficiently and in real time.

- Agent Orchestration: Build a system where multiple specialized agents can collaborate, escalate, and defer.
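To make the agent orchestration element concrete, here is a minimal sketch in Python of agents that collaborate, defer, and escalate. Everything in it is an assumption for illustration: the `AgentResult` type, the two toy agents, and the `min_confidence` threshold are hypothetical names, and a real system would call models rather than match keywords.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class AgentResult:
    agent: str
    answer: Optional[str]  # None means the agent deferred
    confidence: float      # 0.0 to 1.0, self-reported by the agent


# Hypothetical agent signature: takes a task string, returns an AgentResult.
Agent = Callable[[str], AgentResult]


def billing_agent(task: str) -> AgentResult:
    # Specialist: handles invoice questions and defers on everything else.
    if "invoice" in task.lower():
        return AgentResult("billing", "Invoice resent to the customer.", 0.9)
    return AgentResult("billing", None, 0.0)


def support_agent(task: str) -> AgentResult:
    # Generalist fallback: always drafts a reply, but with low confidence.
    return AgentResult("support", f"Draft reply for: {task}", 0.4)


def orchestrate(task: str, agents: List[Agent], min_confidence: float = 0.7) -> AgentResult:
    """Try agents in order; escalate to a human if none is confident enough."""
    for agent in agents:
        result = agent(task)
        if result.answer is not None and result.confidence >= min_confidence:
            return result
    # No agent cleared the threshold, so the task goes to a person.
    return AgentResult("human_escalation", None, 0.0)


agents = [billing_agent, support_agent]
print(orchestrate("Please resend my invoice", agents).agent)
print(orchestrate("The app crashes on login", agents).agent)
```

The design choice worth noting is that escalation is the default outcome: an agent has to earn the right to answer, rather than a human having to catch a bad answer after the fact.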

At organizations where I implemented enterprise systems, we learned that foundational architecture decisions determined long-term scalability. The same principle applies to AI-native architecture: early choices compound over time.

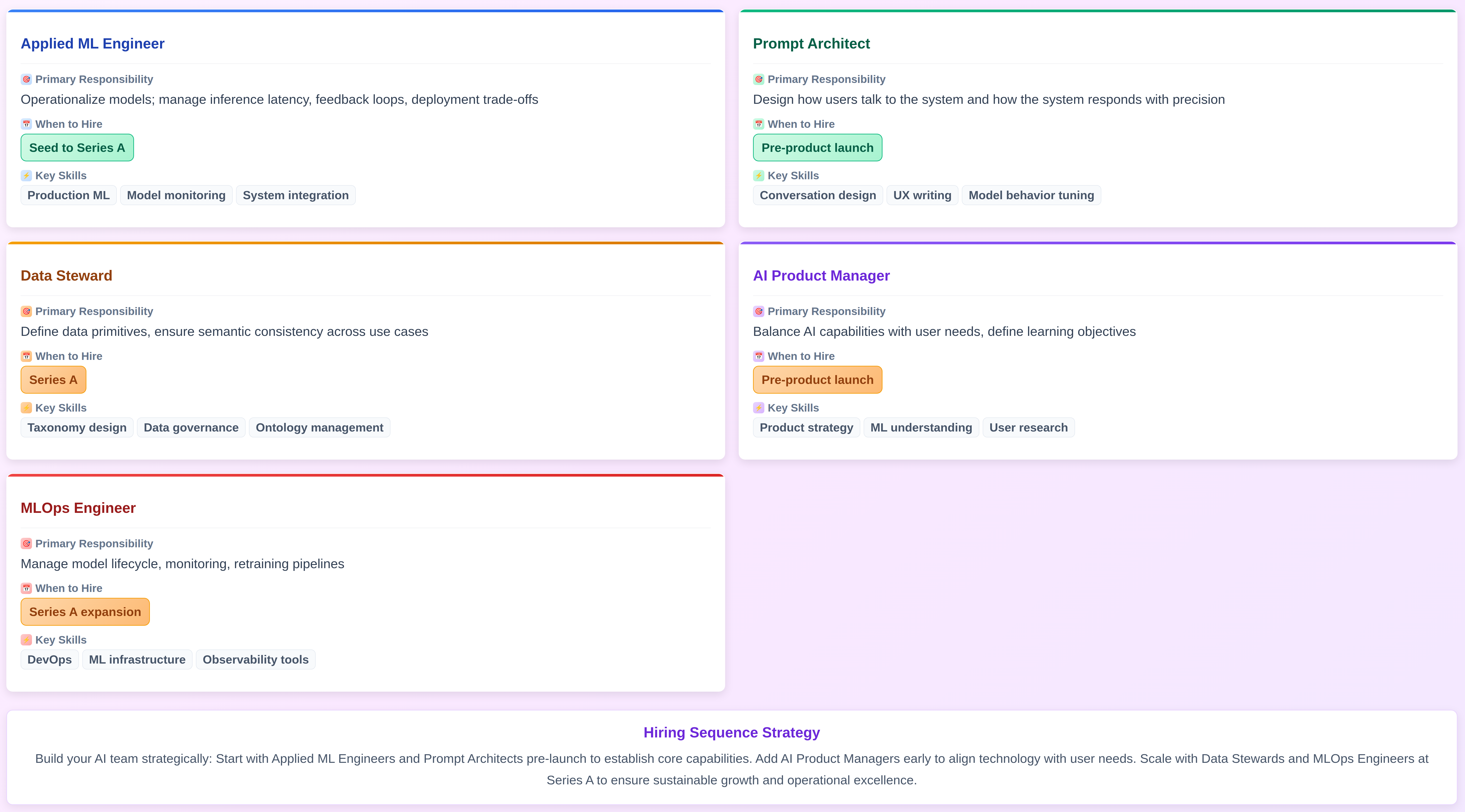

The AI-Native Team Structure

In an AI-native company, your talent stack must mirror your tech stack. It is no longer enough to have engineers and marketers. You need people who understand how systems learn.

At a digital marketing organization that scaled from nine million to one hundred eighty million in revenue, early architectural and talent decisions enabled rapid scaling without major platform rewrites. The same discipline applies to AI-native startups: hire for cognition early, not just code.

Embed Learning Loops into Every Workflow

AI-native companies thrive when every transaction becomes a training example. This requires product thinking that values learnability as much as usability.

Design product experiences that collect labeled feedback through corrections and overrides, track user journeys for inference improvement, surface model uncertainty to allow user correction, and version model responses to avoid drift or regressions.

In one AI-powered revenue operations tool, the feedback loop from user overrides was the single largest driver of model improvement. Every sales suggestion could be edited, and that delta was captured as training data. Within two months, suggestion accuracy rose by twenty-five percent. The product did not just work; it learned.
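A rough sketch of what capturing that delta could look like follows. It is not the implementation used in the tool described above; the `FeedbackExample` record, the `capture_feedback` helper, and the use of acceptance rate as a per-version metric are all assumptions made for illustration. The edit distance comes from Python's standard `difflib` module.

```python
import difflib
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FeedbackExample:
    model_version: str
    suggestion: str    # what the model proposed
    final_text: str    # what the user actually sent
    accepted: bool     # True when the user kept the suggestion unchanged
    similarity: float  # 1.0 means identical; lower means heavier edits


def capture_feedback(model_version: str, suggestion: str, final_text: str) -> FeedbackExample:
    """Turn a user override into a labeled training example."""
    similarity = difflib.SequenceMatcher(None, suggestion, final_text).ratio()
    return FeedbackExample(
        model_version, suggestion, final_text, suggestion == final_text, similarity
    )


def acceptance_rate(log: List[FeedbackExample], model_version: str) -> Optional[float]:
    """Share of suggestions kept unchanged for one model version."""
    rows = [e for e in log if e.model_version == model_version]
    if not rows:
        return None  # no data yet for this version
    return sum(e.accepted for e in rows) / len(rows)


log = [
    capture_feedback("v1", "Offer a 10% discount", "Offer a 10% discount"),
    capture_feedback("v1", "Offer a 10% discount", "Offer a 5% discount"),
]
print(acceptance_rate(log, "v1"))
```

Tagging every example with the model version is what makes it possible to compare versions and catch regressions before they reach every user.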

Build Human-in-the-Loop from the Start

AI fails. It hallucinates. It suggests wrong answers with unwarranted confidence. This is not a bug; it is a property of probabilistic models. The smartest AI-native founders design their systems with human-in-the-loop (HITL) processes from the beginning.

Effective HITL design ensures users can review, edit, and confirm AI-generated outputs. Systems must transparently show confidence scores or sources. Mistakes should be logged, corrected, and retrained systematically. Escalation paths must exist for edge cases and ambiguous scenarios.
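As a minimal sketch of these HITL rules, the snippet below routes each output by confidence and logs human corrections for later retraining. The threshold values, the `Route` names, and the `record_correction` helper are hypothetical; appropriate thresholds depend on the domain and would need to be set from observed error rates.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    HUMAN_REVIEW = "human_review"
    ESCALATE = "escalate"


@dataclass
class ModelOutput:
    text: str
    confidence: float  # 0.0 to 1.0, shown to the user alongside the output
    sources: List[str] = field(default_factory=list)


def route_output(output: ModelOutput, review_below: float = 0.9,
                 escalate_below: float = 0.5) -> Route:
    """Decide who handles an output; both thresholds are assumed values."""
    if output.confidence < escalate_below:
        return Route.ESCALATE      # ambiguous or edge case: send to an expert
    if output.confidence < review_below:
        return Route.HUMAN_REVIEW  # user reviews, edits, and confirms
    return Route.AUTO_APPROVE


def record_correction(log: List[Dict[str, str]], output: ModelOutput, corrected: str) -> None:
    """Log a human correction so it can feed systematic retraining."""
    log.append({"original": output.text, "corrected": corrected})


corrections: List[Dict[str, str]] = []
draft = ModelOutput("Contract renews on March 1.", 0.72, ["contract.pdf"])
if route_output(draft) is Route.HUMAN_REVIEW:
    record_correction(corrections, draft, "Contract renews on April 1.")
print(route_output(draft).value, len(corrections))
```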

In medical, legal, and finance use cases, this is non-negotiable. But even in B2B tools or creative applications, HITL design increases trust and adoption.

Govern Early, Do Not Apologize Later

One mistake I often see is founders treating AI governance like a compliance checklist. They wait until customers or investors demand it. By then, the cost of change is high.

Smart founders govern early. They document:

- What data is used to train models

- How outputs are generated and validated

- Where AI is embedded and what oversight exists

- How model performance and bias are tested over time

This is not about fear but fluency. Boards, customers, and regulators are asking better questions. The startups with answers will win trust and capital.
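One lightweight way to keep the documentation items listed above from drifting is to store them as a structured record next to each model and check it for gaps. The sketch below is an assumption about how that might look, not an established standard; the `GovernanceRecord` field names are hypothetical.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class GovernanceRecord:
    model_name: str
    training_data_sources: List[str] = field(default_factory=list)  # what data trains the model
    validation_method: str = ""                                     # how outputs are validated
    deployment_surfaces: List[str] = field(default_factory=list)    # where AI is embedded
    oversight_owner: str = ""                                       # who provides oversight
    bias_tests: List[str] = field(default_factory=list)             # recurring performance and bias tests


def missing_fields(record: GovernanceRecord) -> List[str]:
    """Return the names of fields that are still empty."""
    return [f.name for f in fields(record) if not getattr(record, f.name)]


record = GovernanceRecord(
    model_name="deal-suggester",
    training_data_sources=["crm_activity_log"],
    validation_method="weekly sampled human review",
)
print(missing_fields(record))
```

A check like this could run in continuous integration so that a model cannot ship while its record has gaps.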

Rethink Monetization Around Intelligence

In an AI-native company, you are not just delivering functionality but intelligence at scale. This creates new monetization opportunities, including usage-based pricing on agent interactions or predictions, tiered pricing based on model accuracy or explainability features, pay-per-insight models where customers pay only for accepted outputs, and internal efficiency gains monetized as services or intellectual property.

One B2B GenAI startup monetized not on seat count but on “decisions improved.” Customers paid more as the model improved because ROI scaled non-linearly.
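The arithmetic behind a pay-per-insight model is simple enough to sketch. The example below bills only for accepted outputs; the function name and the price are illustrative assumptions, not figures from the startup described above. `Decimal` is used instead of floats to avoid rounding errors in currency amounts.

```python
from decimal import Decimal
from typing import List


def pay_per_insight_total(outcomes: List[bool], price_per_accepted: Decimal) -> Decimal:
    """Bill only for outputs the customer accepted (True entries)."""
    accepted = sum(outcomes)  # each True counts as 1
    return price_per_accepted * accepted


# Hypothetical month: 150 suggestions, of which 120 were accepted.
outcomes = [True] * 120 + [False] * 30
print(pay_per_insight_total(outcomes, Decimal("2.50")))  # 120 x 2.50 = 300.00
```

Under this scheme revenue rises with the acceptance rate, so the vendor's incentive to improve the model is aligned with the customer's return.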

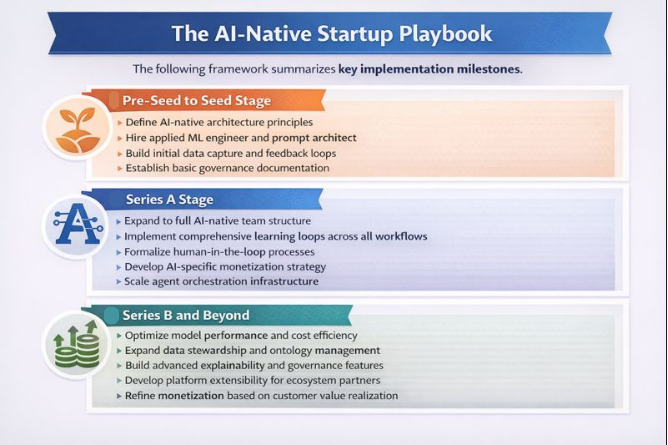

The AI-Native Startup Playbook

The following framework summarizes key implementation milestones:

Conclusion

AI-native companies will win not because they use AI but because everything about them compounds: data, feedback, accuracy, trust, and speed. They build faster, learn faster, and adapt faster. Founders who understand this will architect not just better products but better companies. Intelligence is the moat. The question is not “How do I add AI?” but “How do I build for intelligence from day zero?”

Disclaimer: This blog is intended for informational purposes only and does not constitute legal, tax, or accounting advice. You should consult your own tax advisor or counsel for advice tailored to your specific situation.

Hindol Datta is a seasoned finance executive with over 25 years of leadership experience across SaaS, cybersecurity, logistics, and digital marketing industries. He has served as CFO and VP of Finance in both public and private companies, leading $120M+ in fundraising and $150M+ in M&A transactions while driving predictive analytics and ERP transformations. Known for blending strategic foresight with operational discipline, he builds high-performing global finance organizations that enable scalable growth and data-driven decision-making.

AI-assisted insights, supplemented by 25 years of finance leadership experience.