Executive Summary

The generative AI revolution presents executives with a fundamental timing dilemma: whether to build now, partner strategically, or wait for market maturation. Unlike previous technology cycles, AI adoption does not follow predictable S-curves but instead exhibits volatile patterns of rapid advancement, operational complexity, and recalibration. This article examines three critical phases of AI adoption: the initial hype period characterized by inflated expectations and compressed timelines, the post-deployment discipline phase where operational reality meets strategic promise, and the compounding intelligence stage where sustainable competitive advantage emerges. Success requires understanding that AI represents not merely a tool but a learning asset requiring continuous investment in feedback loops, governance frameworks, and organizational capability. CFOs and boards must develop new evaluation criteria that measure not just usage metrics but business-adjacent outcomes including decision velocity, forecast accuracy improvement, and knowledge accumulation rates. Organizations that navigate these phases successfully treat AI as strategic capital requiring the same rigor applied to human talent and research investments, building systems that learn faster than competitors while maintaining explainability, adaptability, and alignment with core business objectives.

The Mirage and the Momentum

The AI economy moves at a pace that forces every executive to confront critical questions: Are we too early? Are we too late? Are we getting distracted by narrative rather than substance? My experience across organizations ranging from Series A startups to enterprise-grade digital transformation programs shows that what moves quickly in press releases often moves messily in practice.

Working across finance, operations, analytics, and business intelligence has shown me that adoption curves rarely follow clean trajectories. They spike, they stall, they recalibrate. Nowhere is this pattern more evident than in generative AI. We are in a period marked not by maturity but by volatility, which demands something many companies struggle with: strategic pacing.

The current phase of GenAI adoption shows clear signs of peaking. Investment flows faster than infrastructure is built. Pilots receive approval without purpose-built governance. Agent-based systems are layered into workflows faster than integration work can keep up. Beneath this activity lies mounting uncertainty about what is real, what is repeatable, and what will compound into strategic advantage.

Strategic Timing: The Cost of Being Early

Being early without leverage creates strategic debt. Organizations burn resources, expose customers to immature technology, and accumulate technical complexity that must later be replaced. In AI, the cost of premature adoption is especially high for three reasons: model behavior evolves rapidly, which creates maintenance overhead; vendors can lock organizations into immature tooling ecosystems; and early use cases often lack rigorous cost-benefit discipline.

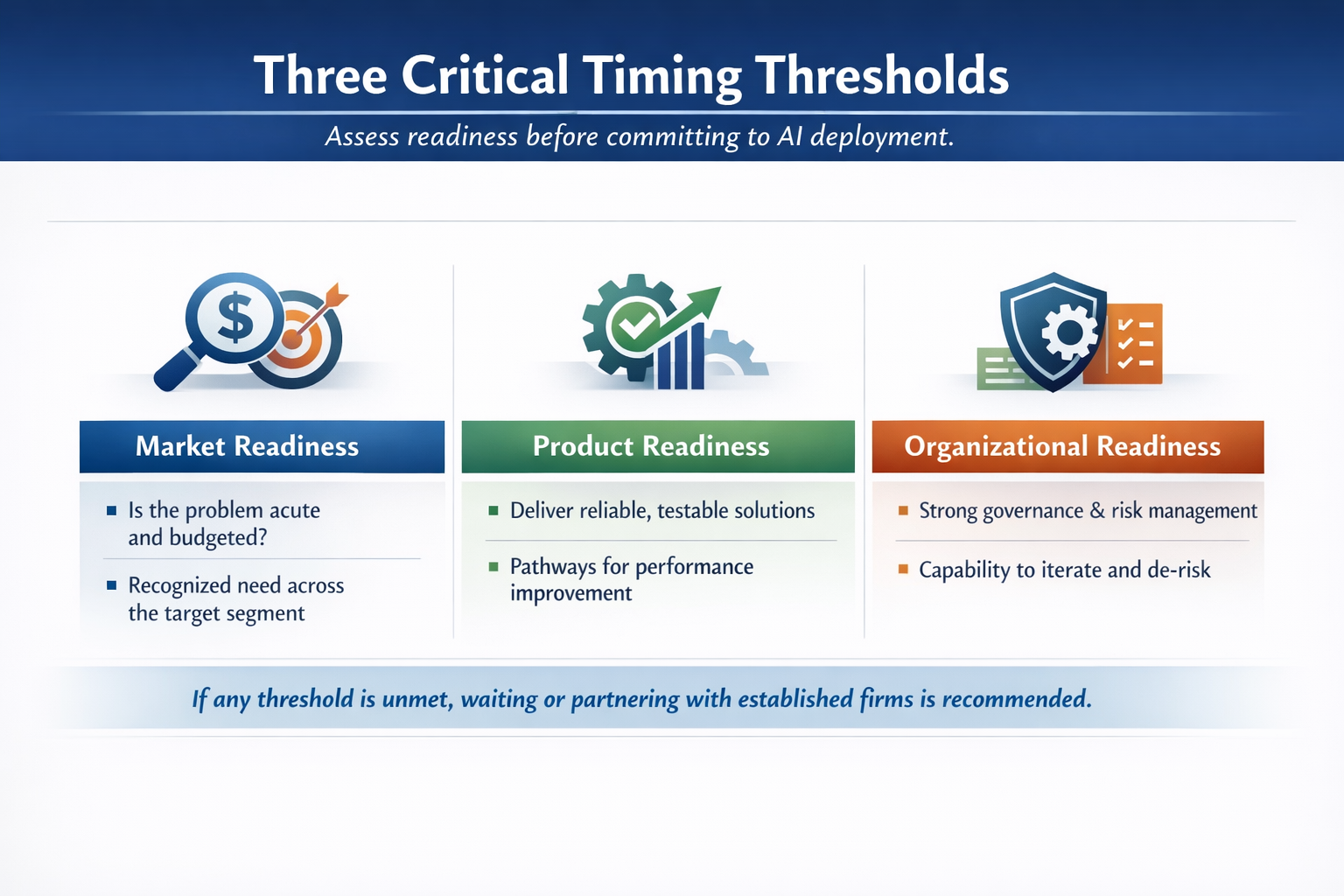

Three Critical Timing Thresholds

Organizations should evaluate readiness across three dimensions before committing to AI deployment:

Signals of Substance Versus Signals of Hype

Boards must develop literacy to distinguish between market momentum and genuine maturity:

| Assessment Dimension | Signal of Hype | Signal of Substance |
| --- | --- | --- |
| Technical Understanding | Team mentions LLMs without articulating training data provenance or model constraints | Team has instrumented feedback loops, documented performance thresholds, and a data privacy roadmap |
| Progress Measurement | Press releases outpace pilot results and validated learnings | AI roadmap ties to quarterly learning goals rather than static feature releases |
| Integration Approach | Bolt-on implementations without middleware or governance layers | Clear orchestration architecture mediating agent access to enterprise systems |
| Metrics Focus | Vanity metrics like queries submitted or tokens generated | Business-adjacent metrics including decision velocity and accuracy improvements |
| Cost Transparency | Vague understanding of total cost of ownership, including maintenance | Detailed cost stack covering API usage, training hours, QA loops, and incident response |

The Role of the CFO: Capital Allocation Under Uncertainty

As a finance leader, I view hype cycles through the prism of capital efficiency rather than technological novelty. Every dollar deployed into AI experimentation must connect to future compound returns: better decisions, faster learning, reduced friction, or enhanced defensibility.

AI Investment Framework

CFOs should evaluate AI investments with a distinct ROI framework built around four questions:

- Time to Feedback: How quickly does the organization learn from each iteration? Rapid feedback loops enable faster refinement and reduce the cost of error correction.

- Model Confidence: What margin of error does the model carry, and how often do human reviewers override its outputs? Persistently high override rates signal that the model, or the use case, needs fundamental rethinking.

- Data Leverage: Are we making proprietary data work harder by extracting insights unavailable to competitors? Data advantage compounds when AI systems discover patterns human analysis misses.

- Elasticity: Can this system scale without human headcount scaling linearly? True leverage emerges when AI enables nonlinear growth in capability relative to resource investment.

These metrics differ from standard investment evaluation because AI is a nonstandard asset class that requires a new vocabulary of judgment.
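To make three of these questions concrete, here is a minimal sketch of how a finance team might compute them from its own deployment records. The `Iteration` record layout and the function names are hypothetical, and the definitions are assumptions: time to feedback is taken as the median days from shipping a change to receiving usable feedback, the override rate as overridden suggestions divided by suggestions shown, and elasticity as the percent change in headcount per percent change in work volume. Data leverage is left out because it resists a single formula.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class Iteration:
    """One iteration of an AI deployment (hypothetical record layout)."""
    shipped_at: datetime   # when a prompt, model, or workflow change went live
    feedback_at: datetime  # when the first usable reviewer feedback arrived
    suggestions: int       # AI outputs shown to human reviewers
    overrides: int         # outputs a reviewer rejected or rewrote


def time_to_feedback_days(iterations: list) -> float:
    """Median number of days between shipping a change and learning from it."""
    return median(
        (it.feedback_at - it.shipped_at).total_seconds() / 86_400 for it in iterations
    )


def override_rate(iterations: list) -> float:
    """Share of AI suggestions that human reviewers overrode."""
    total = sum(it.suggestions for it in iterations)
    return sum(it.overrides for it in iterations) / total if total else 0.0


def headcount_elasticity(volume_before: float, volume_after: float,
                         fte_before: float, fte_after: float) -> float:
    """Percent change in headcount per percent change in work volume.

    Values well below 1.0 mean capacity is growing faster than headcount.
    """
    volume_change = (volume_after - volume_before) / volume_before
    if volume_change == 0:
        raise ValueError("volume did not change; elasticity is undefined")
    fte_change = (fte_after - fte_before) / fte_before
    return fte_change / volume_change
```

For example, a team that handles 50% more volume with 10% more staff has an elasticity of 0.2, which is the kind of nonlinear leverage the Elasticity question is probing for.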

From Deployment to Discipline: The Post-Honeymoon Reality

What begins with euphoria and media attention eventually confronts operational complexity, cost scrutiny, leadership skepticism, and rising user expectations. On this post-hype plateau, AI must prove it deserves a permanent strategic role. Boards shift from asking why the organization is not using GenAI to asking why AI is not yet driving margin improvement.

By the time initial momentum settles, most AI initiatives resemble middleware more than magic. Early wins, such as automated summaries and faster financial closes, blend into day-to-day operations. Meanwhile, hidden costs accumulate: model maintenance, data labeling, user retraining, latency issues, and continuous prompt refinement.

Critical Board Questions for the Discipline Phase

- Is the AI deployment self-sustaining, or does it require permanent human intervention to maintain performance?

- Are KPIs aligned to business outcomes including revenue impact and margin improvement, or just to technical output metrics?

- What organizational capabilities have we actually built, or did we simply purchase tools and wrap them in our branding?

- How do intervention rates, prompt churn, and feedback lag compare to initial projections and industry benchmarks?

AI as Learning Engine, Not Cost Saver

A fundamental mistake organizations make after the hype is expecting AI to function as a perpetual cost-cutting engine. GenAI requires ongoing human feedback to remain useful; it is a learning engine rather than an end-state system. When boards assume GenAI offers plug-and-play implementation, disappointment becomes inevitable.

After deploying agents to accelerate revenue recognition and automate accrual commentary, organizations often discover that variance narratives remain inconsistent: the agents summarize data but lack contextual understanding. What emerges is a need for new hybrid roles that combine controller expertise, prompt engineering capability, and business partnership skills. This costs money, but when executed properly, it creates durable leverage.

The right question shifts from how much can be saved to how much faster and more accurately the organization can learn. Enterprises that frame GenAI around learning loops rather than cost reduction unlock greater returns, because an agent's value compounds as it ingests feedback.

Key Insight: Maximum value comes when organizations invest through all three phases rather than expecting immediate returns from Phase 1 (the hype phase) alone.

Building the Operating Layer: From Tools to Systems

The shift from pilot to platform requires architecture. AI becomes sustainable only when it is connected to the enterprise fabric as an integrated layer that communicates with source systems, human workflows, and compliance logic, rather than bolted on as standalone functionality.

Many startups deploy AI agents into Slack, Notion, or Chrome extensions expecting consistent ROI. However, these interfaces often lack context integrity. What organizations need is a middle-layer architecture: a business logic interpreter that sits between agents and enterprise systems.

Orchestration Layer Components

Effective AI orchestration layers provide several critical functions:

- Real-time data governance ensuring context windows remain clean and relevant

- Document access control verifying that agent responses reflect appropriate permissions

- Response scoping preventing information leakage beyond authorized boundaries

- Audit trail generation creating compliance documentation for agent interactions

- Performance monitoring tracking accuracy, latency, and user satisfaction metrics

Companies solving this challenge build or adopt thin orchestration layers that mediate agent access to ERP, CRM, and file repositories. Without this architecture, agents are far more likely to hallucinate or leak sensitive information. With it, agents can become trustworthy copilots integrated into business operations.
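A toy sketch of such a thin layer follows, covering three of the functions listed above: document access control, context hygiene, and an audit trail. Every class and field name here is hypothetical; the `agent` is any function taking a question and a list of context strings, so no real model API is assumed, and the keyword match is a crude stand-in for real retrieval. Response scoping is enforced by construction, since the agent only ever sees documents the caller is permitted to read; performance monitoring is omitted.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset  # roles permitted to read this document


@dataclass
class AuditEntry:
    timestamp: datetime
    user: str
    role: str
    question: str
    doc_ids: List[str]  # documents that were placed in the agent's context
    response: str


@dataclass
class OrchestrationLayer:
    """Hypothetical thin layer that mediates every agent call."""
    documents: List[Document]
    agent: Callable[[str, List[str]], str]  # any (question, context) -> answer function
    audit_log: List[AuditEntry] = field(default_factory=list)

    def ask(self, user: str, role: str, question: str) -> str:
        # Document access control: drop anything the caller's role may not read,
        # so the agent never sees (and cannot leak) out-of-scope content.
        permitted = [d for d in self.documents if role in d.allowed_roles]
        # Context hygiene: a crude keyword filter standing in for real retrieval,
        # keeping the context window limited to relevant documents.
        terms = set(question.lower().split())
        context = [d for d in permitted if terms & set(d.text.lower().split())]
        answer = self.agent(question, [d.text for d in context])
        # Audit trail: record who asked, what was asked, and which sources were used.
        self.audit_log.append(AuditEntry(
            timestamp=datetime.now(timezone.utc), user=user, role=role,
            question=question, doc_ids=[d.doc_id for d in context], response=answer,
        ))
        return answer
```

The design choice worth noting is that permissions are applied before the agent is called, not by filtering its answer afterward, so a finance user asking about an HR-only document gets an empty context rather than a redacted leak.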

Metrics That Matter: From Latency to Leverage

In the hype phase, AI metrics skew toward usage, such as queries per day or prompts submitted. These are vanity indicators. In the post-hype discipline phase, what matters are business-adjacent metrics that directly link AI effort to business velocity and accuracy.

Business-Adjacent AI Metrics

- Agent-accelerated decisions per week measuring how frequently AI recommendations influence actual business choices

- Human override rates on AI suggestions indicating confidence in model outputs and areas requiring improvement

- Cross-functional latency improvement tracking time reduction in processes like contract negotiation or variance explanation

- Forecast deviation with versus without agent participation quantifying accuracy enhancement from AI augmentation

In one portfolio company, the finance team tracked the difference in forecast error between human-only models and agent-augmented approaches. Over two quarters, they demonstrated a twenty-three percent reduction in error with fewer hours worked. That is not a model statistic; it is a board-level performance indicator.
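A comparison like this can be sketched in a few lines. The sketch assumes mean absolute percentage error (MAPE) as the error measure and reads "reduction in error" as a relative reduction; the article does not say which metric that team used. The numbers are illustrative placeholders, not the portfolio company's data.

```python
def mape(actuals: list, forecasts: list) -> float:
    """Mean absolute percentage error; assumes no actual value is zero."""
    if len(actuals) != len(forecasts) or not actuals:
        raise ValueError("need two non-empty series of equal length")
    return sum(abs(a - f) / abs(a) for a, f in zip(actuals, forecasts)) / len(actuals)


def relative_error_reduction(baseline_error: float, augmented_error: float) -> float:
    """Fractional drop in error from the human-only baseline to the agent-augmented forecast."""
    return (baseline_error - augmented_error) / baseline_error


# Illustrative numbers only, not data from the portfolio company above.
actuals     = [100.0, 200.0, 400.0]
human_only  = [110.0, 180.0, 440.0]
with_agents = [105.0, 190.0, 420.0]

baseline = mape(actuals, human_only)      # about 10% average error
augmented = mape(actuals, with_agents)    # about 5% average error
print(f"error reduction: {relative_error_reduction(baseline, augmented):.0%}")
```

Tracking both series over the same periods, rather than comparing against a historical baseline, keeps the comparison honest about changing business conditions.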

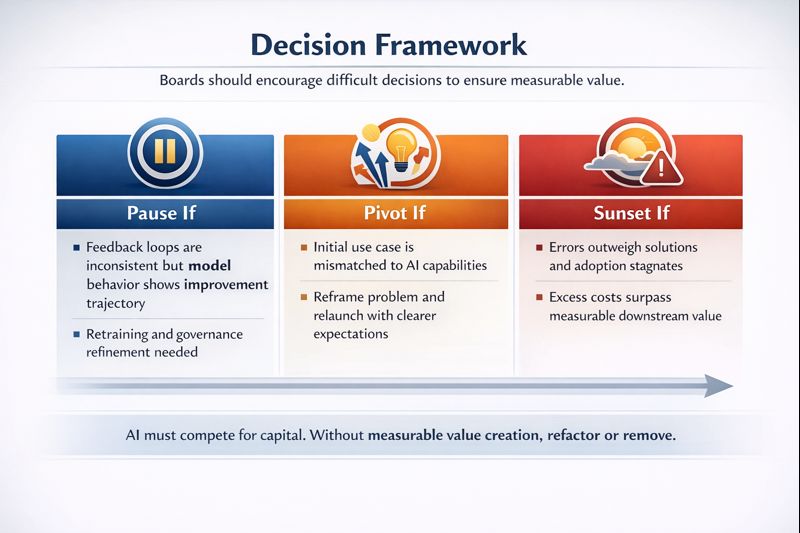

When to Pause, Pivot, or Sunset

Not every AI deployment survives the discipline phase. What separates high-functioning companies from laggards is their willingness to sunset or re-scope with clarity and speed.

Decision Framework

Boards should encourage these difficult decisions. AI, like any capability, must compete for capital. If it is not earning its place through measurable value creation, the answer is not more investment but refactoring or removal.

AI’s Invisible Cost Structure

Fine-tuning models, retraining on updated data, prompt engineering, latency debugging, and compliance testing all consume human and compute resources. These costs rarely appear as distinct P&L line items.

AI Cost Stack Components

- Vendor API usage including token consumption and model access fees

- Agent training hours representing human time investment in prompt refinement

- Quality assurance and feedback loops encompassing validation processes

- Prompt library maintenance involving documentation and optimization efforts

- Incident response addressing model drift or misbehavior

- Data preparation and labeling ensuring training data quality

- Infrastructure and compute supporting model hosting and inference operations

This cost stack is essential to determining true ROI and should be updated quarterly. Without comprehensive cost tracking, organizations cannot accurately assess whether AI investments generate positive returns.
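A minimal sketch of a quarterly cost stack follows, with one field per component listed above and a return calculated on the fully loaded total. The class and field names are hypothetical, the dollar figures are placeholders rather than benchmarks, and how "value created" is measured is left as an assumption the finance team must define.

```python
from dataclasses import dataclass, fields


@dataclass
class QuarterlyAICostStack:
    """One quarter of AI costs in dollars, one field per cost-stack component."""
    vendor_api: float
    agent_training_hours: float        # human prompt-refinement time at loaded cost
    qa_and_feedback_loops: float
    prompt_library_maintenance: float
    incident_response: float
    data_preparation_and_labeling: float
    infrastructure_and_compute: float

    def total(self) -> float:
        return sum(getattr(self, f.name) for f in fields(self))


def quarterly_roi(value_created: float, costs: QuarterlyAICostStack) -> float:
    """Return on the fully loaded cost: (value - cost) / cost."""
    total = costs.total()
    return (value_created - total) / total


# Hypothetical quarter: the figures are placeholders, not benchmarks.
q3 = QuarterlyAICostStack(10_000, 15_000, 5_000, 2_000, 3_000, 8_000, 7_000)
print(q3.total())                 # 50000
print(quarterly_roi(65_000, q3))  # 0.3
```

Counting only the vendor API line would put the same quarter's ROI at 5.5 rather than 0.3, which is exactly the distortion the full cost stack is meant to prevent.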

Compounding Through Intelligence: Strategic Differentiation

Once hype fades and initial deployments mature, the companies that truly capitalize on the AI opportunity are not those building the most features. They are the organizations building the most resilient learning loops, refining intelligence at scale, and anchoring AI into their core strategic models.

AI as Capital Asset

Treating AI as capital rather than code means measuring its returns like those of any other balance sheet asset. From a financial perspective, we are entering an era in which AI systems should sit alongside human capital and research as long-term assets. The ability of AI systems to generate decision-ready insights, reduce reaction time, and model uncertainty becomes a strategic capability. Four measures follow from this view:

- Knowledge accumulation rate measuring whether your agents learn faster than competitors

- Organizational dependency assessing how many critical decisions rely on AI-augmented pathways

- Model attribution linking AI recommendations to outcome improvements including higher margins

- Competitive moat depth evaluating whether AI capabilities create sustainable advantages

Boards need to demand these analytics rather than simple usage dashboards that measure activity without assessing strategic impact.
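Two of these measures can be computed from internal data alone; the sketch below shows one possible definition of each. Both definitions are assumptions: organizational dependency is taken as the share of decisions flagged critical that ran through an AI-augmented pathway (which also answers the board question of what percentage of critical decisions incorporate AI), and knowledge accumulation rate as the average quarter-over-quarter change in model accuracy. The dictionary keys are hypothetical. Comparing the rate against competitors, and assessing moat depth or model attribution, would require external benchmarks or outcome data not modeled here.

```python
def organizational_dependency(decisions: list) -> float:
    """Share of critical decisions that ran through an AI-augmented pathway.

    Each decision is a dict with hypothetical boolean keys 'critical' and 'ai_augmented'.
    """
    critical = [d for d in decisions if d["critical"]]
    if not critical:
        return 0.0
    return sum(d["ai_augmented"] for d in critical) / len(critical)


def knowledge_accumulation_rate(accuracy_by_quarter: list) -> float:
    """Average quarter-over-quarter change in accuracy (0.02 = 2 percentage points)."""
    if len(accuracy_by_quarter) < 2:
        raise ValueError("need at least two quarters of accuracy data")
    deltas = [b - a for a, b in zip(accuracy_by_quarter, accuracy_by_quarter[1:])]
    return sum(deltas) / len(deltas)
```

A flat or negative accumulation rate is an early signal that feedback loops are not actually feeding back, regardless of what usage dashboards show.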

From Linear Workflows to Nonlinear Intelligence

Most companies treat processes as linear workflows where input X flows through steps Y and Z to produce output. However, AI does not think linearly. It reasons probabilistically, maps relationships, and generates hypotheses.

Nonlinear AI Advantages

- Revenue operations where agents surface counterfactuals examining what would happen if marketing touchpoints changed

- Procurement where agents optimize vendor portfolios based on cost, lead time, and ESG impact simultaneously

- Financial planning where agents simulate fiscal scenarios and test budget elasticity under real-time stress conditions

- Product development where agents identify feature combinations that drive engagement

These are nonlinear advantages that depend on orchestration rather than simple execution. They demand a mindset shift from building workflows to designing systems of learning.
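To illustrate what "simulate fiscal scenarios and test budget elasticity" can mean in its simplest form, here is a toy Monte Carlo sketch. It is not a description of how any particular agent works: the lognormal revenue shock, the fixed-plus-variable cost model, and all parameter values are assumptions chosen for illustration. It reports the mean margin, a 5th-percentile downside margin, and the share of scenarios with a loss.

```python
import random
from statistics import mean


def simulate_operating_margin(base_revenue: float, fixed_costs: float,
                              variable_cost_ratio: float, revenue_volatility: float,
                              n_scenarios: int = 10_000, seed: int = 42) -> dict:
    """Monte Carlo stress test of operating margin under random revenue shocks.

    Revenue is drawn as base_revenue * lognormal(0, revenue_volatility), which
    keeps it positive; costs are fixed_costs + variable_cost_ratio * revenue.
    """
    rng = random.Random(seed)  # seeded so scenario runs are reproducible
    margins = []
    for _ in range(n_scenarios):
        revenue = base_revenue * rng.lognormvariate(0.0, revenue_volatility)
        costs = fixed_costs + variable_cost_ratio * revenue
        margins.append((revenue - costs) / revenue)
    margins.sort()
    return {
        "mean_margin": mean(margins),
        "p5_margin": margins[int(0.05 * n_scenarios)],  # 5th-percentile (downside) margin
        "share_negative": sum(m < 0 for m in margins) / n_scenarios,
    }


# Hypothetical budget: compare a lean and a heavier fixed-cost plan under the same stress.
lean = simulate_operating_margin(1_000.0, 300.0, 0.5, revenue_volatility=0.3)
heavy = simulate_operating_margin(1_000.0, 450.0, 0.5, revenue_volatility=0.3)
```

Because both plans share a seed, they face identical revenue draws, so any gap in downside margin or loss probability comes purely from the fixed-cost commitment. That is the budget sensitivity a planning agent would be expected to surface at far greater scale.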

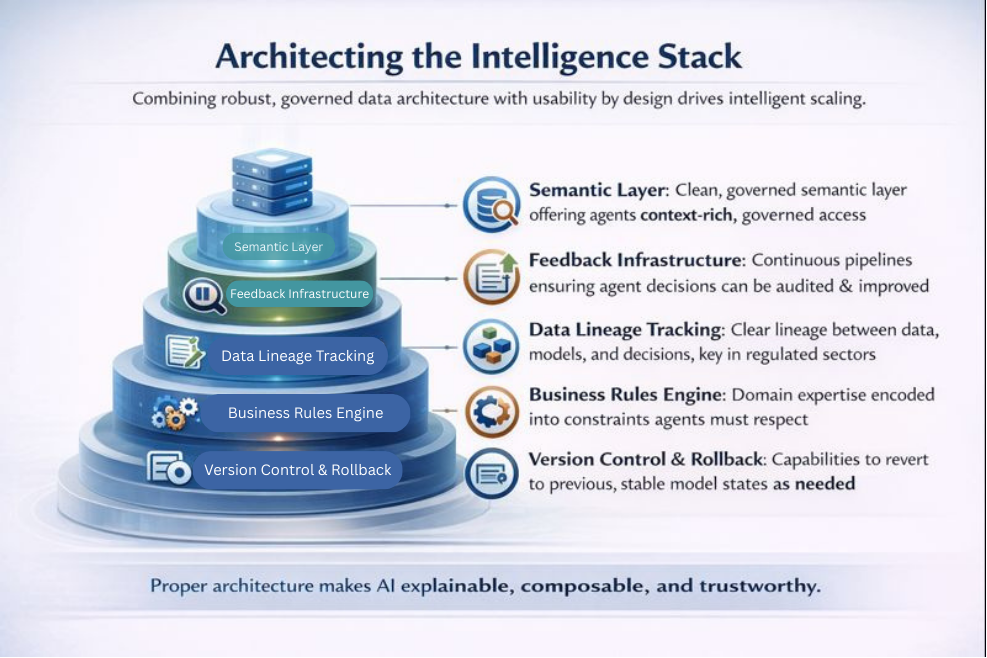

Architecting the Intelligence Stack

In companies I have advised or led, one factor consistently distinguishes fast scalers from laggards: their data architecture was not just robust but usable by design.

Without this foundation, AI becomes a black box. With proper architecture, AI becomes explainable, composable, and trustworthy.

Talent Strategy: Training the AI-Adjacent Organization

The most critical roles emerging now are AI-adjacent: finance analysts who prompt effectively, product managers who understand token limits, controllers who can debug prompt drift, and legal teams who can red-team AI behavior for compliance risks.

AI-Adjacent Competencies

- Prompt engineering proficiency enabling team members to extract maximum value from AI systems

- Model limitations awareness understanding when AI recommendations should be trusted versus escalated

- Feedback quality providing structured input that improves model performance

- Cross-functional translation connecting technical AI capabilities with business problems

- Ethical reasoning identifying scenarios where AI deployment raises fairness or privacy concerns

The Board’s Role: Stewarding Strategic Intelligence

AI maturity is now a board-level responsibility. Directors must move beyond curiosity into accountability: asking how AI is embedded in planning rather than just execution, requiring AI risk disclosures that cover data drift and security vulnerabilities, demanding clear audit trails for decisions influenced by agents, and enforcing oversight of hallucination risk and model degradation.

Quarterly Board AI Assessment Questions:

- How has model performance changed relative to baseline, and what interventions improved or degraded accuracy?

- What percentage of critical business decisions now incorporate AI recommendations?

- What incidents occurred involving AI misbehavior, and what process improvements resulted?

- How do our AI capabilities compare to competitors, and where do we possess unique advantages?

- What regulatory developments could impact our AI deployment strategy?

AI Compounding: The Final Test of Differentiation

Companies will be valued not on whether they use AI but on how their AI improves over time. Compounding intelligence represents the final differentiator, requiring feedback loops that shorten with scale, models that fine-tune to proprietary data signals, and users who become co-teachers rather than just consumers.

I have witnessed this transformation across industries: in AdTech where agents learned audience response curves in days rather than months, in logistics where dispatch models adjusted dynamically to weather and labor constraints, and in SaaS where go-to-market agents optimized renewal language based on ideal customer profile nuances. None of these wins came from simply deploying AI. They came from investing in intelligence loops that compound over time.

Conclusion

The AI hype cycle will continue generating new models and metaphors. However, the companies that win will stop treating AI as magic and start treating it as a discipline. That discipline looks different depending on organizational stage, sector, and size, but the core principles hold. Build what you can refine through systematic feedback. Partner where you cannot scale independently. Wait when infrastructure lags capability requirements. Design for learning, because in a world of agents, the companies that learn fastest win. AI is just the latest ingredient in organizational capability; your culture of precision, iteration, and strategic patience is the real differentiator. The future is not just automated but intelligent. Build accordingly, with conviction tempered by the governance clarity that turns hype into sustainable competitive advantage.

Disclaimer: This blog is intended for informational purposes only and does not constitute legal, tax, or accounting advice. You should consult your own tax advisor or counsel for advice tailored to your specific situation.

Hindol Datta is a seasoned finance executive with over 25 years of leadership experience across SaaS, cybersecurity, logistics, and digital marketing industries. He has served as CFO and VP of Finance in both public and private companies, leading $120M+ in fundraising and $150M+ in M&A transactions while driving predictive analytics and ERP transformations. Known for blending strategic foresight with operational discipline, he builds high-performing global finance organizations that enable scalable growth and data-driven decision-making.

AI-assisted insights, supplemented by 25 years of finance leadership experience.