Executive Summary

Having evaluated high-growth companies over the past three decades, from early SaaS disruptors and data-rich logistics platforms to vertical AI tools in healthcare and compliance, I can say with confidence that traditional valuation frameworks are straining under the weight of the GenAI wave. Discounted cash flow (DCF) models remain the spreadsheet workhorse, and public comps are still the go-to shortcut. But both struggle to capture the core economic driver of today’s most innovative AI startups: compounding cognition. This is not just a theoretical shortcoming. It affects how capital is priced, how investors frame upside, and how boards justify strategic investment. The issue is simple: traditional models are built to evaluate execution businesses, not learning systems. Generative AI startups are, at their core, systems that learn, adapt, and improve not by hiring more people but by deepening their models and their data advantage. To value AI-native companies correctly, we must go beyond margin multiples and revenue waterfalls and begin treating intelligence (contextual, evolving, and proprietary) as an asset class in itself.

Why Traditional DCF Struggles with AI Startups

The standard DCF model assumes that future value flows from cash generated by operations, discounted back at a risk-adjusted rate. But in GenAI startups, future value often flows from usage-driven learning, data compounding, and platform extensibility, none of which map neatly to revenue or EBITDA projections.
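To make the limitation concrete, here is a minimal textbook DCF sketch in Python. It is not a model from any engagement described in this post; the function name, the sample projection, and the Gordon-growth terminal value are illustrative assumptions. Notice that its only inputs are projected cash flows and a discount rate, so data compounding or model improvement affects the result only insofar as someone has already translated it into those projections.

```python
def discounted_cash_flow(cash_flows, discount_rate, terminal_growth=None):
    """Present value of year-end free cash flows for years 1..n.

    If terminal_growth is given, a Gordon-growth terminal value based on
    the final year's cash flow is added (an illustrative assumption).
    """
    if not cash_flows:
        raise ValueError("cash_flows must not be empty")
    if discount_rate <= -1:
        raise ValueError("discount_rate must be greater than -1")
    value = sum(cf / (1 + discount_rate) ** year
                for year, cf in enumerate(cash_flows, start=1))
    if terminal_growth is not None:
        if terminal_growth >= discount_rate:
            raise ValueError("terminal_growth must be below discount_rate")
        n = len(cash_flows)
        terminal_value = cash_flows[-1] * (1 + terminal_growth) / (discount_rate - terminal_growth)
        value += terminal_value / (1 + discount_rate) ** n
    return value


# Hypothetical five-year projection that still includes heavy training spend.
projection = [-2.0, -1.0, 0.5, 2.0, 4.0]  # $M free cash flow per year
print(round(discounted_cash_flow(projection, 0.25, terminal_growth=0.03), 2))
```

Two companies with the same projection receive the same value here, even if only one of them is accumulating a proprietary data asset.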

At one gaming enterprise where I led global financial planning and controllership, we evaluated AI-enhanced player retention systems. The DCF captured the monetized usage but not the compounding learning from new players or the platform pull from integration partners.

AI startups may burn cash longer, not because they are inefficient but because they are training. That spend behaves like R&D capital rather than an operating cost. Traditional DCF models treat it as a drag on near-term free cash flow when, in reality, it is the accumulation of a cognitive asset.

Comp-Based Valuation: A Mirage in a Hype Market

When metrics are immature or cash flows are negative, investors fall back on public or private comparables. In GenAI, that is dangerous. Most comparables are either too early-stage, with inflated valuations, or not truly comparable.

Valuing an AI-powered compliance engine by comparing it to traditional SaaS companies ignores the core differentiator: defensibility comes not from distribution but from domain-specific data, agent design, and reinforcement learning loops.

At one professional services organization where I built enterprise KPI frameworks, investors applied sales efficiency multiples while ignoring that the model improved with every customer onboarded, so the marginal value per user rose over time.

Comps are useful as benchmarks but obscure where GenAI value is created: not in users acquired but in knowledge synthesized.

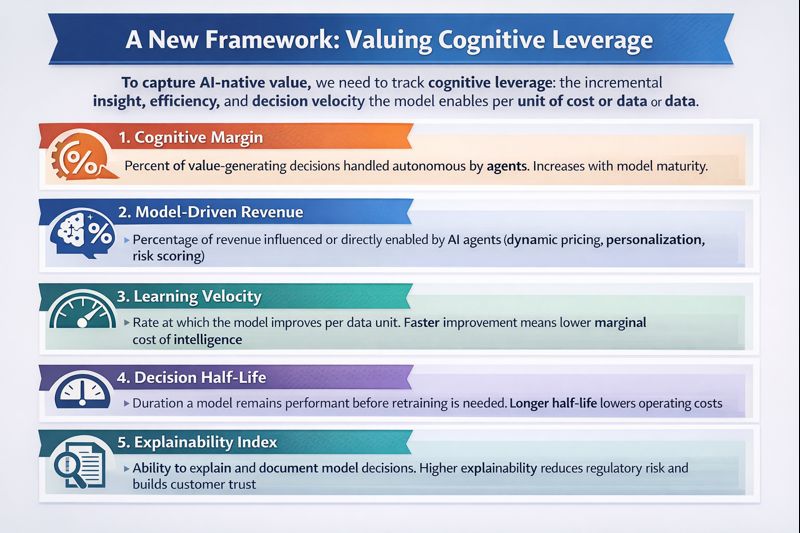

A New Framework: Valuing Cognitive Leverage

To capture AI-native value, we need a model that tracks not just cash flow but cognitive leverage: how much incremental insight, efficiency, or decision velocity the model enables per unit of cost or data.

Here are five metrics I now use in AI startup valuations:

- Cognitive Margin: Measures the percentage of value-generating decisions handled autonomously by agents. If sixty percent of underwriting, pricing, or forecasting decisions are machine-initiated and accurate, the business has leverage. This margin tends to grow non-linearly as the model matures.

- Model-Driven Revenue: Quantifies the percentage of revenue influenced or directly enabled by AI agents. This includes dynamic pricing, personalization, risk scoring, or routing optimization. High model-driven revenue signals structural defensibility.

- Learning Velocity: Tracks how fast the model improves per unit of new data. A startup whose agent performance improves by ten percent for every one thousand new interactions compounds faster, and has a lower marginal cost of intelligence, than one that needs far more data for the same gain.

- Decision Half-Life: Measures how long a model remains performant before retraining is required. Short half-lives indicate technical fragility. Longer half-lives reflect durability of learned behavior and lower operating cost.

- Explainability Index: Captures the startup’s ability to explain, trace, and document agent decisions. I expect investors to soon value this the way they value security posture. High explainability lowers regulatory risk and improves customer trust.
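The five metrics above can be computed from ordinary operating data. The sketch below is one possible operationalization, not a standard definition. It assumes a hypothetical decision log with three boolean flags, treats the explainability index as the share of agent decisions with a documented rationale, and models decision half-life as exponential performance decay at a constant rate between retrainings. The ten-percent-per-thousand-interactions default mirrors the learning-velocity example in the text.

```python
import math
from dataclasses import dataclass


@dataclass
class Decision:
    """One value-generating decision from a hypothetical decision log."""
    machine_initiated: bool  # the agent made the call without a human initiating it
    accurate: bool           # the outcome was later judged correct
    explained: bool          # rationale was traced and documented (assumed flag)


def cognitive_margin(decisions):
    """Share of decisions that were both machine-initiated and accurate."""
    if not decisions:
        return 0.0
    return sum(d.machine_initiated and d.accurate for d in decisions) / len(decisions)


def model_driven_revenue_share(model_driven_revenue, total_revenue):
    """Fraction of revenue influenced or directly enabled by AI agents."""
    return model_driven_revenue / total_revenue


def learning_velocity_multiplier(new_interactions, gain_per_block=0.10, block_size=1000):
    """Compounded performance multiplier, assuming each block of
    `block_size` interactions improves performance by `gain_per_block`."""
    return (1 + gain_per_block) ** (new_interactions / block_size)


def decision_half_life(monthly_decay_rate):
    """Months until performance halves, assuming exponential decay
    at a constant continuous rate between retrainings."""
    return math.log(2) / monthly_decay_rate


def explainability_index(decisions):
    """Share of machine-initiated decisions whose rationale is documented."""
    machine = [d for d in decisions if d.machine_initiated]
    if not machine:
        return 0.0
    return sum(d.explained for d in machine) / len(machine)


log = ([Decision(True, True, True)] * 6
       + [Decision(True, False, False)]
       + [Decision(False, True, False)] * 3)
print(cognitive_margin(log))                          # 0.6
print(round(learning_velocity_multiplier(2000), 2))   # 1.21
```

Six accurate agent decisions out of ten give a cognitive margin of 0.6, matching the sixty percent example. Two thousand interactions at ten percent per thousand compound to a 21 percent improvement rather than 20 percent.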

Agent Performance Metrics

If the startup deploys agents for forecasting, legal triage, or vendor scoring, track:

- Agent Override Rate: How often do humans intervene in AI-driven decisions?

- Agent Drift Rate: How frequently does performance degrade without retraining?

- Agent Time-to-Adapt: How long does it take for the system to learn a new domain?

- Agent-to-Analyst Ratio: How many tasks traditionally handled by analysts are now completed autonomously, relative to those still handled by people?

These are operational proxies for intelligence scalability. At a digital marketing organization that scaled from nine million to one hundred eighty million dollars in revenue, we implemented AI-powered campaign optimization that progressively reduced the rate of human intervention from seventy percent to twenty percent over eighteen months.
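The agent metrics above reduce to simple ratios over operating logs. This sketch assumes hypothetical inputs: counts of decisions and overrides, a per-period performance score, and daily scores on a new domain. The drift tolerance and the "first day at target" definition of time-to-adapt are illustrative choices. The last function applies the reduction in human intervention described above, under the added assumption that it followed a constant monthly rate, which the text does not state.

```python
def override_rate(agent_decisions, human_overrides):
    """Share of AI-driven decisions that a human changed or reversed."""
    return human_overrides / agent_decisions if agent_decisions else 0.0


def drift_rate(scores, tolerance=0.02):
    """Share of period-over-period steps in which performance fell by more
    than `tolerance`, assuming `scores` spans a window with no retraining."""
    if len(scores) < 2:
        return 0.0
    drops = sum(1 for prev, cur in zip(scores, scores[1:]) if prev - cur > tolerance)
    return drops / (len(scores) - 1)


def time_to_adapt(daily_scores, target):
    """Days until performance on a new domain first reaches `target` (None if never)."""
    for day, score in enumerate(daily_scores, start=1):
        if score >= target:
            return day
    return None


def agent_to_analyst_ratio(tasks_by_agents, tasks_by_analysts):
    """Tasks completed autonomously per task still completed by an analyst."""
    return tasks_by_agents / tasks_by_analysts


def implied_monthly_decline(start_rate, end_rate, months):
    """Constant monthly relative decline that takes start_rate to end_rate."""
    return 1 - (end_rate / start_rate) ** (1 / months)


decline = implied_monthly_decline(0.70, 0.20, 18)
print(f"{decline:.1%} per month")  # 6.7% per month
```

Under that constant-rate assumption, falling from seventy to twenty percent over eighteen months implies the intervention rate shrank by roughly 6.7 percent of its prior level each month.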

Case Study: Forecasting AI in a B2B SaaS Company

At organizations where I led FP&A and board reporting, we explored AI-augmented forecasting systems. One implementation replaced traditional forecasting with an AI agent trained on sales motion, product usage, and marketing signals. The model reduced forecasting variance by forty percent and freed up fifteen percent of finance team hours.

Under traditional valuation, that impact would be largely invisible, recorded simply as an opex reduction. We instead modeled it as a compounding systems advantage: lower planning cycle costs, faster reallocation decisions, and greater strategic optionality.

We assigned value using the replacement cost of building the model, the discounted value of planning efficiencies, and the revenue uplift from improved decision velocity. The result was a twelve percent uplift in internal valuation.
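The three-part valuation described above can be sketched as follows. How the components were weighted in the actual engagement is not stated, so this sketch assumes a simple weighted blend of a cost approach (replacement cost) and an income approach (discounted efficiency savings plus discounted margin on the revenue uplift). Every input, the weighting, and the function names are hypothetical. The freed finance hours would first need converting into dollars, for example hours multiplied by a loaded hourly cost.

```python
def present_value(annual_amount, rate, years):
    """PV of a level amount received at the end of each of `years` years."""
    return sum(annual_amount / (1 + rate) ** t for t in range(1, years + 1))


def forecasting_system_value(replacement_cost, annual_efficiency_savings,
                             annual_revenue_uplift, uplift_margin,
                             discount_rate, years, cost_weight=0.5):
    """Blend a cost approach and an income approach (hypothetical weighting)."""
    efficiency_pv = present_value(annual_efficiency_savings, discount_rate, years)
    # Only the margin earned on uplifted revenue adds value, not gross revenue.
    uplift_pv = present_value(annual_revenue_uplift * uplift_margin, discount_rate, years)
    income_value = efficiency_pv + uplift_pv
    blended = cost_weight * replacement_cost + (1 - cost_weight) * income_value
    return {
        "replacement_cost": replacement_cost,
        "efficiency_pv": efficiency_pv,
        "revenue_uplift_pv": uplift_pv,
        "blended": blended,
    }


def valuation_uplift(baseline_valuation, added_value):
    """Added value as a fraction of the baseline valuation."""
    return added_value / baseline_valuation


# Hypothetical inputs for illustration only.
parts = forecasting_system_value(
    replacement_cost=600_000,
    annual_efficiency_savings=180_000,
    annual_revenue_uplift=1_500_000,
    uplift_margin=0.25,
    discount_rate=0.12,
    years=5,
)
print({k: round(v) for k, v in parts.items()})
print(f"uplift: {valuation_uplift(10_000_000, parts['blended']):.1%}")
```

Keeping the components separate, rather than reporting only the blended figure, lets a board see how much of the uplift rests on cost replacement versus forward-looking efficiency and revenue assumptions.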

The Role of Explainability in Value Creation

In high-stakes sectors such as finance, healthcare, and compliance, models that explain are more valuable than those that simply predict. Black-box models may perform well, but they cannot justify their outputs. Transparent models do both.

At one education nonprofit where I secured forty million dollars in Series B funding, we evaluated AI-powered program matching systems. The key value driver was not matching accuracy but the system’s ability to explain recommendations and surface similar cases. This allowed program managers to trust the system and reduced compliance risk.

Explainability is not just a feature but a valuation multiplier. It reduces sales friction, speeds compliance review, and unlocks regulated markets.

Boards Must Begin Asking Smarter Questions

CFOs and boards assessing AI startups must evolve their playbook.

Instead of “How fast are you growing?” ask “How does your model improve with growth?”

Instead of “What is your gross margin?” ask “What is your cognitive margin?”

Instead of “What is your LTV-to-CAC ratio?” ask “How does LTV improve as the model learns across customers?”

These questions reframe AI startups not as operational bets but as learning machines with economic agency.
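The LTV question can be sketched with a standard discounted LTV formula in which gross margin, rather than staying flat, rises a little each year as a stand-in for learning accumulated across the customer base. The linear annual margin gain, the cap, and all inputs below are hypothetical assumptions, not figures from this post.

```python
def customer_ltv(annual_revenue, gross_margins, retention, discount_rate):
    """Discounted lifetime value over len(gross_margins) years.

    gross_margins[t] is the margin earned in year t + 1; the customer is
    still active in that year with probability retention ** t.
    """
    ltv = 0.0
    for t, margin in enumerate(gross_margins):
        survival = retention ** t
        ltv += annual_revenue * margin * survival / (1 + discount_rate) ** (t + 1)
    return ltv


def learning_adjusted_margins(base_margin, annual_gain, years, cap=0.95):
    """Hypothetical margin path that improves linearly as the model learns, capped at `cap`."""
    return [min(base_margin + annual_gain * t, cap) for t in range(years)]


static = customer_ltv(10_000, [0.70] * 5, retention=0.85, discount_rate=0.10)
learning = customer_ltv(10_000, learning_adjusted_margins(0.70, 0.03, 5),
                        retention=0.85, discount_rate=0.10)
print(f"static LTV: {static:,.0f}  learning-adjusted LTV: {learning:,.0f}")
```

Dividing either figure by CAC gives the corresponding LTV-to-CAC ratio. The gap between the two ratios is one way to put a number on how much the model's learning is worth per customer.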

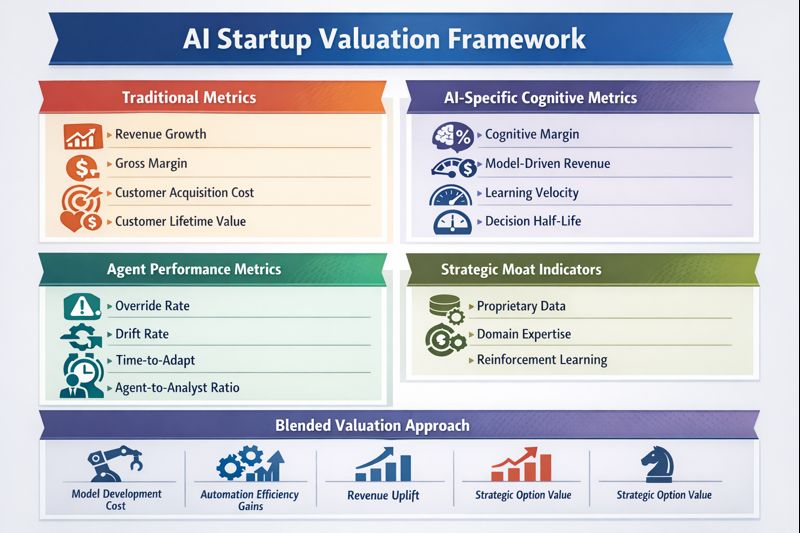

AI Startup Valuation Framework

The following framework integrates traditional and AI-specific metrics for comprehensive AI startup valuation:

- Traditional Metrics: Revenue growth, gross margin, customer acquisition cost (CAC), and customer lifetime value (LTV) provide baseline indicators of operational health.

- AI-Specific Cognitive Metrics: Cognitive margin measures the percentage of decisions made autonomously. Model-driven revenue quantifies the revenue contribution enabled by AI. Learning velocity tracks the rate of improvement. Decision half-life measures model durability. The explainability index assesses transparency.

- Agent Performance Metrics: Agent override rate indicates the level of autonomy. Agent drift rate measures stability. Agent time-to-adapt shows flexibility. Agent-to-analyst ratio demonstrates leverage.

- Strategic Moat Indicators: Proprietary training data signals data advantage. Domain-specific model tuning captures specialization. Reinforcement learning loops demonstrate compounding improvement.

- Blended Valuation Approach: Combines replacement cost analysis for model development, discounted efficiency gains from automation, revenue uplift from improved performance, and strategic option value from platform extensibility.
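One way to hold the framework together in practice is a single scorecard record plus a strategic-option term for platform extensibility. The sketch below assumes a flat dataclass and values the option with a one-step, probability-weighted decision-tree approximation (the extension is pursued only if its payoff exceeds its cost). That is a simplification rather than a binomial or Black-Scholes real-option model. All field names and inputs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AIValuationScorecard:
    """Hypothetical record mirroring the framework's metric groups."""
    # Traditional metrics
    revenue_growth: float
    gross_margin: float
    cac: float
    ltv: float
    # AI-specific cognitive metrics
    cognitive_margin: float
    model_driven_revenue_share: float
    learning_velocity: float          # e.g. fractional gain per 1,000 interactions
    decision_half_life_months: float
    explainability_index: float
    # Agent performance metrics
    override_rate: float
    drift_rate: float
    time_to_adapt_days: float
    agent_to_analyst_ratio: float
    # Strategic moat indicators
    proprietary_training_data: bool
    domain_tuned_models: bool
    rl_feedback_loops: bool

    def ltv_to_cac(self) -> float:
        return self.ltv / self.cac

    def moat_count(self) -> int:
        """Number of moat indicators present (0 to 3)."""
        return sum([self.proprietary_training_data,
                    self.domain_tuned_models,
                    self.rl_feedback_loops])


def strategic_option_value(success_probability, payoff, exercise_cost,
                           discount_rate, years):
    """One-step decision-tree value of an optional platform extension:
    with probability `success_probability` the extension yields `payoff`,
    and it is only pursued when the payoff exceeds `exercise_cost`."""
    net = max(payoff - exercise_cost, 0.0)
    return success_probability * net / (1 + discount_rate) ** years


def blended_valuation(operating_value, option_value):
    """Operating value (from cost and income approaches) plus option value."""
    return operating_value + option_value
```

The `max(..., 0.0)` floor is what makes this an option rather than a commitment: an extension that would lose money is simply not pursued, so it adds no negative value.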

Conclusion

Traditional valuation tools still matter. But when applied without adjustment, they undervalue what AI startups truly offer: systemic leverage, compounding insight, and speed of iteration. As capital becomes more selective, founders must be prepared to explain value not just in revenue terms but in cognitive terms. And CFOs must become interpreters not just of financials but of intelligence architecture. The next generation of market leaders will not be the ones with the most revenue but the ones with the best models, clearest agents, and most transparent governance.

Disclaimer: This blog is intended for informational purposes only and does not constitute legal, tax, or accounting advice. You should consult your own tax advisor or counsel for advice tailored to your specific situation.

Hindol Datta is a seasoned finance executive with over 25 years of leadership experience across SaaS, cybersecurity, logistics, and digital marketing industries. He has served as CFO and VP of Finance in both public and private companies, leading $120M+ in fundraising and $150M+ in M&A transactions while driving predictive analytics and ERP transformations. Known for blending strategic foresight with operational discipline, he builds high-performing global finance organizations that enable scalable growth and data-driven decision-making.

AI-assisted insights, supplemented by 25 years of finance leadership experience.