Executive Summary

In three decades of working with companies across software as a service, freight logistics, education technology, nonprofit, and professional services, I have seen firsthand how every operational breakthrough begins with a design choice. Early enterprise resource planning implementations promised visibility. Cloud migration promised scale. Now we are entering a new phase in which enterprises do not just automate processes; they delegate judgment. Having implemented enterprise resource planning systems including NetSuite and Oracle Financials, designed business intelligence architectures, and built operational frameworks across organizations that scaled from nine million to one hundred eighty million dollars in revenue, I have learned that technology success depends on architectural thinking. The rise of autonomous artificial intelligence agents (decision-making systems embedded within workflows) is forcing companies to confront a fundamental question: What does a self-steering enterprise look like? This article outlines a blueprint for artificial intelligence agents as a layer of the enterprise stack, covering roles, hierarchies, error loops, and the governance frameworks required to make autonomous systems trustworthy and effective.

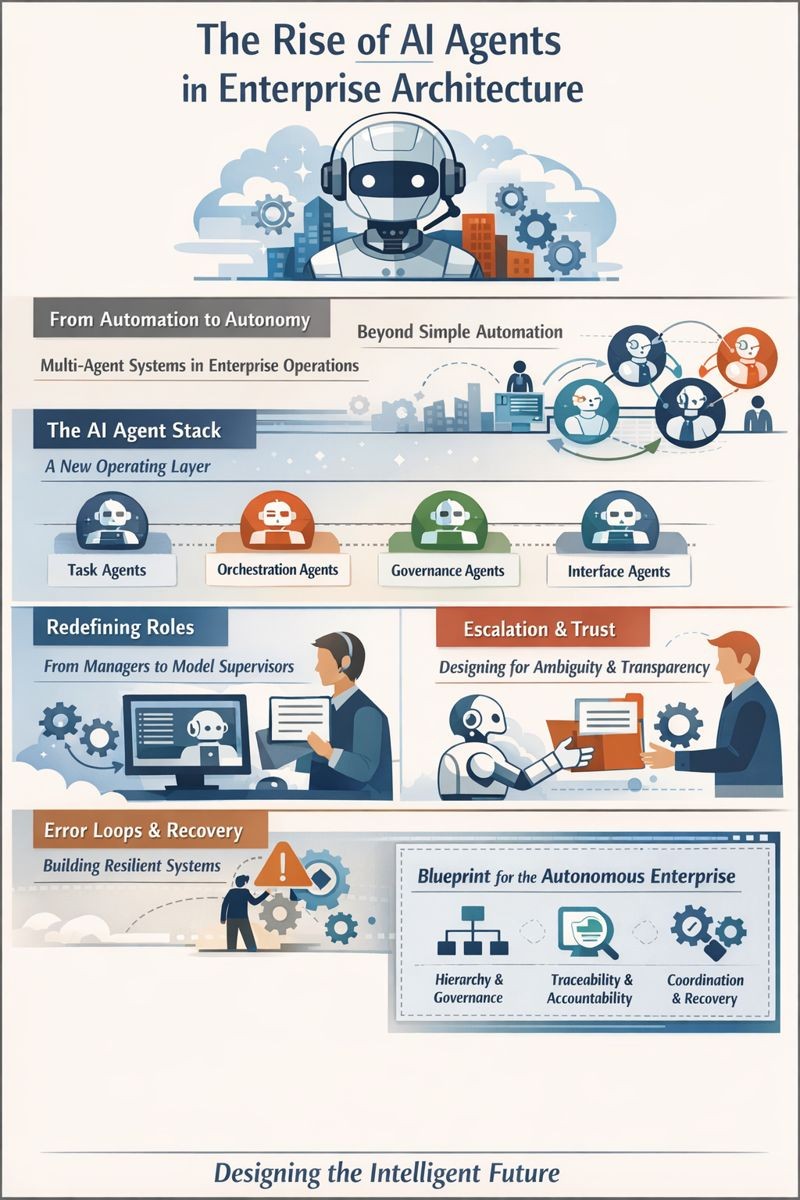

From Automation to Autonomy: The New Enterprise Layer

We are not talking about automation in the narrow sense of bots that click, sort, and file. We are talking about multi-agent systems: autonomous entities trained to reason, escalate, and learn, and embedded in revenue operations, compliance, finance, procurement, and customer interaction. These agents do not merely follow logic trees. They operate with context. They perform functions that once required analysts, managers, and operations leads. They make decisions on your behalf.

The implications for enterprise design are profound. An architecture where humans and agents coexist must solve for hierarchy, interoperability, accountability, and trust. The autonomous enterprise will not emerge by stitching tools together. It will require a blueprint: a reimagining of systems, roles, and error recovery. My project management certification and experience leading implementations across multiple organizations taught me that sustainable transformation requires intentional architecture, not incremental tool adoption.

The AI Agent Stack: A New Operating System

Just as companies once layered customer relationship management on top of sales or human resources information systems over people operations, we are now entering an era where artificial intelligence agents form a cognitive layer across the enterprise. This layer does not replace enterprise resource planning, customer relationship management, or business intelligence tools. It orchestrates them.

At a one hundred twenty million dollar logistics company, where I managed supply chain analytics and reduced logistics cost per unit by twenty-two percent, we deployed a multi-agent system that monitored shipping costs, demand volatility, and service level agreement compliance. One agent forecasted volume. Another recommended pricing changes. A third adjusted partner allocations based on reliability scores. None of these agents operated in isolation. They coordinated, negotiated, and escalated.

This layered system, which I now call the autonomous stack, includes four key components. Task agents are specialized for discrete workflows such as forecasting, invoice reconciliation, and contract flagging. Orchestration agents are meta-level systems that manage workflows across task agents, sequence their dependencies, and set priorities. Governance agents are watchdog systems that track agent behavior, decision logs, exception rates, and escalation patterns. Interface agents serve as the bridge between humans and agents, summarizing decisions, flagging anomalies, and allowing overrides.
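To make the four layers concrete, here is a minimal Python sketch of how they might fit together, loosely modeled on the logistics example above. Everything in it is an illustrative assumption rather than the system described: the class names, the 0.7 confidence floor, and the three toy task agents (forecast, pricing, allocation) are hypothetical, and real task agents would call models rather than simple formulas.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Decision:
    agent: str
    value: Any         # the output downstream agents consume
    confidence: float  # the agent's own confidence estimate, 0..1


@dataclass
class GovernanceAgent:
    """Watchdog layer: logs every decision and flags low-confidence ones."""
    min_confidence: float = 0.7  # hypothetical floor
    log: list = field(default_factory=list)

    def approve(self, decision: Decision) -> bool:
        self.log.append(decision)
        return decision.confidence >= self.min_confidence


@dataclass
class Orchestrator:
    """Runs task agents in dependency order over a shared context."""
    governance: GovernanceAgent
    steps: list = field(default_factory=list)  # (name, task_agent) pairs

    def run(self, context: dict) -> list:
        escalations = []
        for name, task_agent in self.steps:
            decision = task_agent(context)
            context[name] = decision.value  # downstream agents read upstream output
            if not self.governance.approve(decision):
                escalations.append(name)
        return escalations


def interface_summary(governance: GovernanceAgent, escalations: list) -> str:
    """Interface layer: a plain-language digest for the human supervisor."""
    lines = [f"{d.agent}: {d.value} (confidence {d.confidence:.2f})" for d in governance.log]
    lines.append("Needs review: " + (", ".join(escalations) or "none"))
    return "\n".join(lines)


# Hypothetical task agents (toy logic standing in for real models).
def forecast_agent(ctx: dict) -> Decision:
    history = ctx["weekly_volume"]
    return Decision("forecast", round(sum(history) / len(history)), 0.9)


def pricing_agent(ctx: dict) -> Decision:
    # Raise price 5% when forecast volume exceeds capacity; be less sure in that case.
    over = ctx["forecast"] > ctx["capacity"]
    return Decision("pricing", 1.05 if over else 1.0, 0.6 if over else 0.85)


def allocation_agent(ctx: dict) -> Decision:
    reliability = ctx["partner_reliability"]
    return Decision("allocation", max(reliability, key=reliability.get), 0.8)


gov = GovernanceAgent()
orch = Orchestrator(gov, [("forecast", forecast_agent),
                          ("pricing", pricing_agent),
                          ("allocation", allocation_agent)])
ctx = {"weekly_volume": [900, 1100, 1300], "capacity": 1000,
       "partner_reliability": {"carrier_a": 0.92, "carrier_b": 0.97}}
escalated = orch.run(ctx)
print(interface_summary(gov, escalated))
```

The orchestrator only sequences work and passes results downstream. Judging whether a decision is trustworthy is left to the governance layer, and the interface layer only summarizes, so each concern can be tuned or replaced independently.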

The success of this architecture depends not just on model quality but on how these agents interact, escalate, and recover. This is the true challenge of enterprise artificial intelligence. Coordination, not capability, defines success. My certification in production and inventory management emphasizes this systems perspective. Manufacturing operations require coordination across demand forecasting, production scheduling, inventory positioning, and supplier management. No single component optimizes the whole. Success requires orchestration across interdependent systems. The same principle applies to autonomous agent architecture.

Redefining Roles: From Managers to Model Supervisors

With agents taking over operational workflows, human roles must shift. The manager of tomorrow is not a task assigner. They are a model supervisor, someone who monitors agent behavior, fine-tunes training data, reviews decision logs, and acts as an escalation point for unresolved ambiguity. In a professional services firm, we deployed a time entry validation agent that cross-checked submitted hours against project charters and budget thresholds. Initially, the agent raised too many false flags. The team assigned a controller, a person trained in prompt design, logic validation, and pattern review. Within weeks, false positives dropped by sixty percent. The agent learned. The controller’s role evolved into artificial intelligence stewardship.
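A rule-based check of the kind the time entry agent performed might look like the following sketch. The `Charter` and `TimeEntry` structures and the `warn_at` budget-burn threshold are hypothetical. Thresholds like `warn_at` are exactly the settings a model supervisor would adjust when false positives run high.

```python
from dataclasses import dataclass


@dataclass
class Charter:
    project: str
    members: set          # people chartered to bill this project
    budget_hours: float


@dataclass
class TimeEntry:
    person: str
    project: str
    hours: float


def validate(entries, charters, warn_at=0.9):
    """Return (entry, reason) flags; warn_at is a hypothetical budget-burn threshold."""
    by_project = {c.project: c for c in charters}
    burned = {c.project: 0.0 for c in charters}
    flags = []
    for e in entries:
        charter = by_project.get(e.project)
        if charter is None:
            flags.append((e, "no charter for project"))
            continue
        if e.person not in charter.members:
            flags.append((e, "person not on project charter"))
        burned[e.project] += e.hours
        if burned[e.project] > charter.budget_hours * warn_at:
            flags.append((e, f"budget burn above {warn_at:.0%}"))
    return flags


charters = [Charter("P1", {"ana", "raj"}, 100.0)]
entries = [TimeEntry("ana", "P1", 40), TimeEntry("raj", "P1", 45),
           TimeEntry("lee", "P1", 8), TimeEntry("ana", "P9", 5)]
flags = validate(entries, charters)
for entry, reason in flags:
    print(entry.person, entry.project, reason)
```

Entries are processed in submission order, so budget burn accumulates as hours arrive. Once a project crosses the threshold, every later entry on it is flagged, which is one realistic source of the noisy flags the controller had to tune away.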

This is not a theoretical role. It is a design imperative. Every agent requires a human fallback path, a named individual or team who owns the domain, monitors behavior, and retrains the system when edge cases emerge. Having built finance teams across cybersecurity, software as a service, digital marketing, gaming, logistics, manufacturing, and education sectors, I learned that accountability determines execution quality. Clear ownership drives performance. Ambiguous responsibility creates gaps. The same principle applies to agent oversight.

Escalation Logic: Designing for Ambiguity

Autonomous systems thrive in structured environments. But ambiguity is a feature of enterprise life. A purchase order with mismatched terms, a billing dispute, or a policy exception can each trigger the need for escalation. The best autonomous architectures do not suppress ambiguity. They design for it. Each agent must be given confidence thresholds. When confidence falls below a defined level, the agent escalates to a human, not with a question but with a case file: data, context, options, and likely outcomes. The human resolves the case and returns the resolution, which becomes new training data.
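The escalation pattern can be sketched as follows, assuming the agent can score each candidate action with a confidence between 0 and 1. The `EscalatingAgent` and `CaseFile` names, the 0.8 threshold, and the purchase order data are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CaseFile:
    """What the agent hands to a human instead of a bare question."""
    data: dict             # the raw record under review
    context: str           # why the agent is unsure
    options: list          # candidate actions, most to least confident
    likely_outcomes: dict  # option -> expected consequence, as the agent sees it


@dataclass
class EscalatingAgent:
    threshold: float  # minimum confidence to act without a human
    training_examples: list = field(default_factory=list)

    def decide(self, data, context, scores, outcomes):
        """scores: option -> confidence in [0, 1]; outcomes: option -> description."""
        best = max(scores, key=scores.get)
        if scores[best] >= self.threshold:
            return "act", best
        ranked = sorted(scores, key=scores.get, reverse=True)
        case_file = CaseFile(data, context, ranked, {o: outcomes[o] for o in ranked})
        return "escalate", case_file

    def record_resolution(self, case_file, chosen_option):
        # The human's resolution is kept as a labeled example for retraining.
        self.training_examples.append((case_file.data, chosen_option))


agent = EscalatingAgent(threshold=0.8)
kind, result = agent.decide(
    data={"po": "PO-1042", "po_terms": "net 45", "contract_terms": "net 30"},
    context="PO payment terms differ from the master contract",
    scores={"approve": 0.55, "hold": 0.40, "reject": 0.05},
    outcomes={"approve": "pay on PO terms", "hold": "ask vendor to reissue PO",
              "reject": "return PO to buyer"},
)
if kind == "escalate":
    agent.record_resolution(result, "hold")  # the human chose to hold
```

Because the case file ranks the options and states their expected consequences, the reviewer is choosing among prepared alternatives rather than starting from scratch. Each recorded resolution is also a labeled example for retraining.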

In an education technology firm, we built a finance agent that reviewed vendor payments. When faced with ambiguous tax classifications, it did not guess. It flagged, explained the decision gap, and requested a policy update. Over time, this feedback loop reduced exception rates while improving policy precision. Escalation is not a failure mode. It is a learning mode. When embedded into architecture, it becomes a source of resilience.
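Here is a minimal sketch of this feedback loop. It assumes the agent classifies payments only from an explicit policy table. A missing entry produces a flag with an explanation rather than a guess, and each policy update shrinks the set of cases that need a human. The category names and classification labels are placeholders, not real tax treatments.

```python
class VendorPaymentAgent:
    """Classifies vendor payments from an explicit policy table; never guesses."""

    def __init__(self, policy):
        self.policy = dict(policy)  # vendor category -> tax classification (placeholder labels)

    def review(self, payment):
        classification = self.policy.get(payment["category"])
        if classification is None:
            # Explain the gap and ask for a policy decision instead of guessing.
            return {"status": "flagged",
                    "gap": f"no policy covers category {payment['category']!r}",
                    "request": "policy update"}
        return {"status": "classified", "classification": classification}

    def apply_policy_update(self, category, classification):
        self.policy[category] = classification


def exception_rate(agent, payments):
    """Share of a batch the agent could not classify on its own."""
    results = [agent.review(p) for p in payments]
    return sum(r["status"] == "flagged" for r in results) / len(results)


batch = [{"vendor": "V1", "category": "software"},
         {"vendor": "V2", "category": "consulting"},
         {"vendor": "V3", "category": "travel"}]
agent = VendorPaymentAgent({"software": "class_a", "travel": "class_b"})
before = exception_rate(agent, batch)              # consulting is flagged
agent.apply_policy_update("consulting", "class_c")  # human closes the policy gap
after = exception_rate(agent, batch)
print(f"exception rate: {before:.0%} -> {after:.0%}")
```

In this sketch the agent does not learn on its own. The improvement comes entirely from humans tightening the policy table in response to well-explained flags, which is the loop the paragraph describes.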

My background as a Certified Internal Auditor informs this perspective. Internal control frameworks include exception handling and escalation protocols. When automated controls identify anomalies, they escalate to human review. When human review identifies control weaknesses, those findings feed back into control design. This continuous improvement cycle is foundational to effective control environments. Autonomous agent architectures must incorporate the same discipline.

Trust and Transparency: Making the Invisible Visible

The greatest barrier to adoption in autonomous systems is not performance. It is opacity. Boards, CFOs, legal counsel, and frontline employees all ask the same question: How did the agent arrive at that decision? The answer lies in decision traceability. Every autonomous agent must produce a log: inputs, model pathway, thresholds met, policies triggered, and the final action taken. These logs are not just for compliance. They are for confidence.
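One way to capture the fields listed above is a structured, append-only record for each decision. The sketch below assumes a Python dataclass serialized to one JSON object per line (JSON Lines). The field names follow the paragraph, while the grant review values are invented for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionLogEntry:
    agent: str
    inputs: dict               # the data the agent saw
    model_pathway: list        # ordered rules/models the decision passed through
    thresholds_met: dict       # threshold name -> whether it was satisfied
    policies_triggered: list   # policy identifiers that applied
    final_action: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def to_json(self) -> str:
        # One JSON object per line keeps the log appendable and easy to query.
        return json.dumps(asdict(self), sort_keys=True)


entry = DecisionLogEntry(
    agent="grant_review",
    inputs={"grant_id": "G-17", "amount": 25000},
    model_pathway=["eligibility_rules", "risk_model_v2"],
    thresholds_met={"risk_score<0.3": True},
    policies_triggered=["POL-budget-cap"],
    final_action="approve",
)
line = entry.to_json()
print(line)
```

Writing every field on every decision, including empty lists when no policy fired, means a reviewer never has to guess whether something was omitted or simply did not apply.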

In a nonprofit where I served as CFO, the autonomous grant review agent produced a decision card for every action. It listed the logic, risk flags, and supporting data. Reviewers could override or approve with a click. This created a culture of collaboration between human and agent, not dependency or distrust. Transparency is not a user interface feature. It is an architectural principle. Trust compounds when systems explain themselves.

Having implemented Sarbanes-Oxley controls and managed audit relationships across organizations including a public gaming company, I learned that auditability requires documentation. Auditors must be able to trace from transaction to control to approval to accounting treatment. If any link is missing, the control is ineffective. Autonomous agent systems must provide the same traceability. Every decision must be traceable from input through logic to output, with clear documentation of thresholds, policies, and escalations.
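The auditor's rule that a missing link makes a control ineffective translates directly into a completeness check. This sketch assumes decisions are stored as dictionaries. Both chains and the sample records are hypothetical. A link counts as present even when it holds an empty value, such as an empty list of triggered policies, and as missing only when it is absent or `None`.

```python
# Hypothetical chains: the auditor's trail and an agent decision trail.
AUDIT_CHAIN = ("transaction", "control", "approval", "accounting_treatment")
AGENT_CHAIN = ("input", "logic", "thresholds", "policies", "escalation", "output")


def trace_gaps(record, chain):
    """Return the links in `chain` that are missing (absent or None) from `record`."""
    return [link for link in chain if record.get(link) is None]


def is_traceable(record, chain):
    # Same rule as the audit test: any missing link makes the trail ineffective.
    return not trace_gaps(record, chain)


agent_decision = {
    "input": {"invoice": "INV-88", "amount": 4200},
    "logic": "three_way_match_v1",
    "thresholds": {"price_variance<2%": True},
    "policies": [],        # none triggered, but the field is still recorded
    "escalation": "none",
    "output": "approve",
}
audit_record = {"transaction": "INV-88", "control": "three-way match",
                "approval": None, "accounting_treatment": "expense"}

print(is_traceable(agent_decision, AGENT_CHAIN))
print(trace_gaps(audit_record, AUDIT_CHAIN))
```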

Error Loops and Systemic Recovery

All systems fail. The question is whether they recover intelligently. In autonomous enterprises, we must distinguish between local errors (a single agent failing to complete a task) and systemic errors (a cascade triggered by flawed agent assumptions). This requires two safeguards. The first is autonomous monitoring agents designed to spot pattern anomalies, such as sudden drops in forecast accuracy or increases in override frequency. The second is incident response playbooks: predefined recovery paths that specify when to roll back, retrain, or override models across the stack.
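As an illustration of both safeguards, the sketch below uses a simple z-score against a recent baseline as the monitoring rule and a lookup table as the playbook. The 3.0 cutoff, the metric names, and the rule that two simultaneous anomalies indicate a systemic error are assumptions for the example. Production monitoring would likely use more robust statistics.

```python
import math
from statistics import mean, stdev

# Hypothetical playbook: anomaly type -> predefined recovery path.
PLAYBOOK = {
    "override_spike": "retrain",
    "accuracy_drop": "roll_back",
    "systemic": "manual_override",
}


def zscore(baseline, latest):
    """Standard score of `latest` against a baseline window (needs >= 2 points)."""
    mu, sd = mean(baseline), stdev(baseline)
    if sd == 0:
        return 0.0 if latest == mu else math.copysign(math.inf, latest - mu)
    return (latest - mu) / sd


def monitor(override_rate, forecast_accuracy, z=3.0):
    """Each argument is (baseline values, latest value). Returns recovery actions."""
    actions = {}
    if zscore(*override_rate) > z:        # overrides rising sharply
        actions["override_rate"] = PLAYBOOK["override_spike"]
    if zscore(*forecast_accuracy) < -z:   # accuracy falling sharply
        actions["forecast_accuracy"] = PLAYBOOK["accuracy_drop"]
    if len(actions) > 1:
        # Several signals at once are treated as a possible cascade (systemic error).
        actions = {"stack": PLAYBOOK["systemic"]}
    return actions


overrides = ([0.05, 0.06, 0.05, 0.04, 0.05], 0.20)
accuracy_ok = ([0.91, 0.92, 0.90, 0.91], 0.90)
accuracy_bad = ([0.91, 0.92, 0.90, 0.91], 0.70)
print(monitor(overrides, accuracy_ok))   # local error: retrain one agent
print(monitor(overrides, accuracy_bad))  # systemic: escalate across the stack
```

The monitoring rule and the playbook are kept separate on purpose. Detection thresholds can be tuned without changing which recovery path each anomaly maps to, and that mapping is decided in advance rather than during an incident.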

In a software as a service company, a pricing optimization agent triggered an unexpected churn spike in a low-volume segment. The governance agent flagged an anomaly. The system auto-escalated. Human supervisors reviewed the pattern, adjusted the weighting of recent data, and retrained the model. The system self-healed within forty-eight hours. That agility is only possible when recovery is designed in advance.

Having managed financial planning and analysis through multiple economic cycles and business disruptions, I learned that resilience requires preparation. You cannot design recovery protocols during a crisis. You must design them in advance, test them regularly, and refine them continuously. The same principle applies to autonomous agent systems. Error handling must be designed into the architecture, not added after failures occur.

From Workflows to Decision Flows

Most enterprise systems are designed around workflows: sequences of tasks completed by systems and people. But autonomous enterprises operate on decision flows. The question becomes: What decisions are being made, by whom, with what confidence, and with what outcome? That is the CFO’s new visibility requirement. It is no longer enough to know how long the close took; the CFO also needs to know how many agent decisions were made, how many required escalation, and how many were corrected.
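A decision flow report can start as a simple aggregation over decision records. The record keys below (`agent`, `confidence`, `escalated`, `corrected`) and the sample close-period data are hypothetical. Each of the CFO's questions then becomes a count or rate.

```python
from collections import Counter
from statistics import mean


def decision_flow_summary(decisions):
    """Aggregate agent decision records for a period such as a monthly close.

    Each record is a dict with hypothetical keys: 'agent', 'confidence',
    'escalated' (bool), and 'corrected' (bool: a human changed the outcome).
    """
    total = len(decisions)
    if total == 0:
        return {"decisions": 0}
    escalated = sum(d["escalated"] for d in decisions)
    corrected = sum(d["corrected"] for d in decisions)
    return {
        "decisions": total,
        "by_agent": dict(Counter(d["agent"] for d in decisions)),
        "mean_confidence": round(mean(d["confidence"] for d in decisions), 3),
        "escalated": escalated,
        "corrected": corrected,
        "escalation_rate": escalated / total,
        "correction_rate": corrected / total,
    }


close_decisions = [
    {"agent": "ap_agent", "confidence": 0.9, "escalated": False, "corrected": False},
    {"agent": "ap_agent", "confidence": 0.6, "escalated": True, "corrected": False},
    {"agent": "recon_agent", "confidence": 0.8, "escalated": False, "corrected": True},
    {"agent": "recon_agent", "confidence": 0.9, "escalated": False, "corrected": False},
]
summary = decision_flow_summary(close_decisions)
print(summary)
```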

In one multinational organization, we tracked decision flow analytics and built a heatmap of friction. It showed where agents struggled, where humans overrode too frequently, and where process ambiguity needed design. That heatmap became our product roadmap. Having designed enterprise key performance indicator frameworks using business intelligence tools including MicroStrategy and Domo, I learned that measurement shapes improvement. When we track the right metrics, we optimize the right outcomes.
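A friction heatmap of this kind can be approximated by computing the human override rate for each combination of agent and process area. The record fields and names here are hypothetical, and a plain-text grid stands in for a chart in a business intelligence tool.

```python
from collections import defaultdict


def friction_heatmap(decisions):
    """Override rate for each (agent, process) cell, from decision records."""
    totals = defaultdict(int)
    overrides = defaultdict(int)
    for d in decisions:
        key = (d["agent"], d["process"])
        totals[key] += 1
        overrides[key] += d["overridden"]  # True counts as 1
    return {key: overrides[key] / totals[key] for key in totals}


def render(heatmap, width=12):
    """Plain-text grid: rows are agents, columns are process areas."""
    agents = sorted({a for a, _ in heatmap})
    processes = sorted({p for _, p in heatmap})
    rows = ["agent".ljust(width) + "".join(p.ljust(width) for p in processes)]
    for a in agents:
        cells = [f"{heatmap[(a, p)]:.0%}" if (a, p) in heatmap else "-" for p in processes]
        rows.append(a.ljust(width) + "".join(c.ljust(width) for c in cells))
    return "\n".join(rows)


records = [
    {"agent": "ap_agent", "process": "invoices", "overridden": True},
    {"agent": "ap_agent", "process": "invoices", "overridden": False},
    {"agent": "ap_agent", "process": "vendors", "overridden": False},
    {"agent": "recon_agent", "process": "invoices", "overridden": True},
]
heatmap = friction_heatmap(records)
print(render(heatmap))
hottest = max(heatmap, key=heatmap.get)  # the cell most in need of design attention
```

Sorting cells by override rate gives a first cut at the roadmap the paragraph describes. High-override cells point either to an agent that needs retraining or to a process whose rules are ambiguous.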

Conclusion: Designing With Responsibility

Autonomy is not a destination. It is a design choice. The companies that succeed will not be the ones that deploy the most agents. They will be the ones that design the clearest systems of accountability, oversight, and coordination. We must build enterprises that do not just scale output but scale judgment, and that do not just eliminate work but elevate insight.

The autonomous enterprise is not science fiction. It is already emerging, one agent at a time, one recovery loop at a time, one design decision at a time. Based on thirty years of financial leadership, operational execution, and strategic advisory across diverse sectors and situations, from startups to public companies, from high growth to operational optimization, I can attest that the organizations that master autonomous agent architecture will create competitive advantages that compound over time. Those that treat agents as point solutions rather than architectural layers will struggle with coordination, governance, and trust.

The future of enterprise architecture is autonomous. But autonomy without architecture is chaos. The CFOs, chief operating officers, and technology leaders who design thoughtfully, govern rigorously, and orchestrate systematically will build enterprises that are not just faster or cheaper but fundamentally more intelligent. The blueprint is emerging. The question is whether your organization will design it or be disrupted by those who do.

Disclaimer: This blog is intended for informational purposes only and does not constitute legal, tax, or accounting advice. You should consult your own tax advisor or counsel for advice tailored to your specific situation.

Hindol Datta is a seasoned finance executive with over 25 years of leadership experience across SaaS, cybersecurity, logistics, and digital marketing industries. He has served as CFO and VP of Finance in both public and private companies, leading $120M+ in fundraising and $150M+ in M&A transactions while driving predictive analytics and ERP transformations. Known for blending strategic foresight with operational discipline, he builds high-performing global finance organizations that enable scalable growth and data-driven decision-making.

AI-assisted insights, supplemented by 25 years of finance leadership experience.